A summary of my notes on the advanced part of the Gulimall (谷粒商城) course…

Elasticsearch introduction: https://www.elastic.co/cn/what-is/elasticsearch

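Elasticsearch is built on the open-source library Lucene. Lucene cannot be used directly, however: you have to write your own code against its interfaces. Elasticsearch wraps Lucene and exposes a REST API, so it works out of the box. Documentation: https://www.elastic.co/guide/en/elasticsearch/reference/current/index.html https://www.elastic.co/guide/cn/elasticsearch/guide/current/foreword_id.html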

Community Chinese documentation: https://es.xiaoleilu.com/index.html http://doc.codingdict.com/elasticsearch/0/

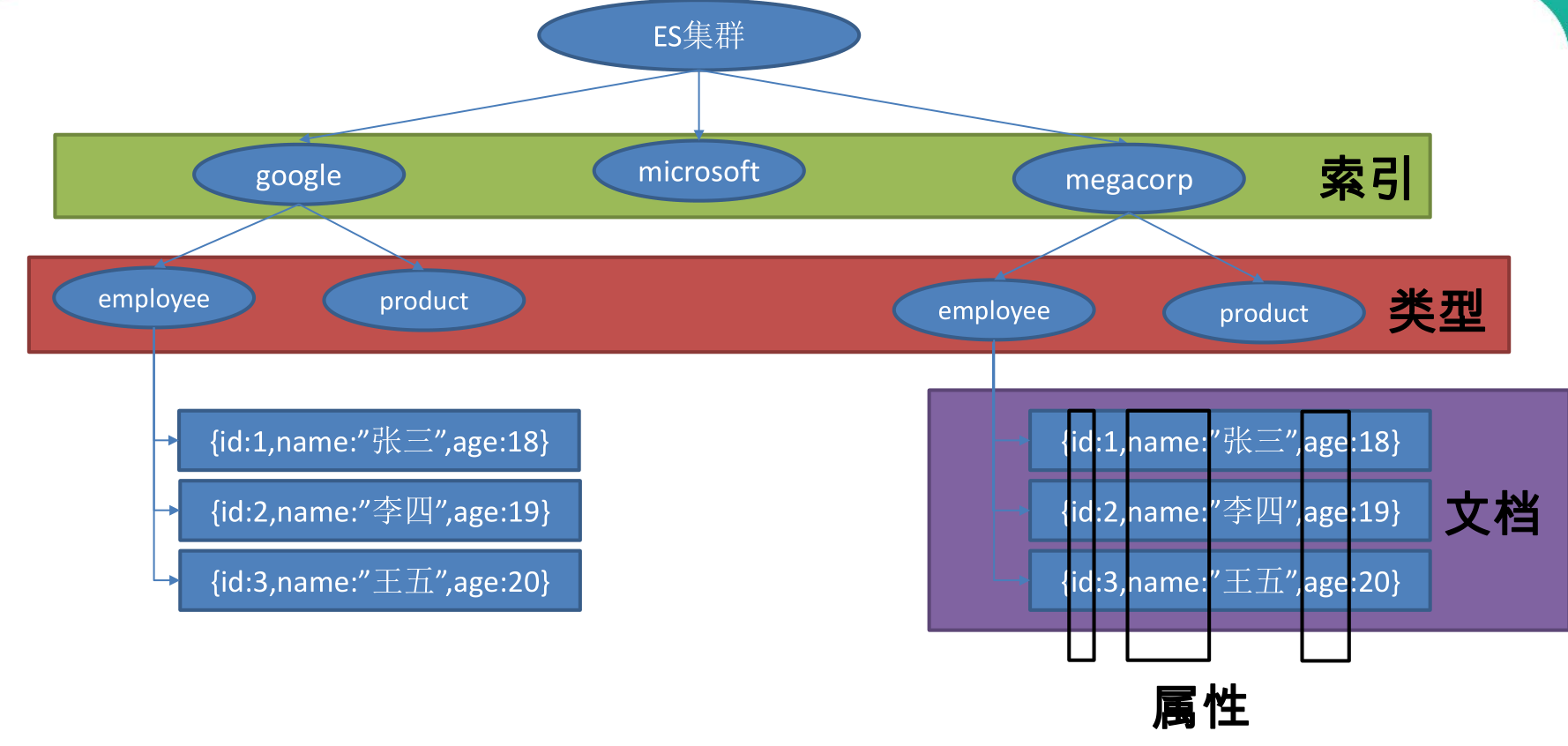

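Basic concepts

1. Index

As a verb, it is equivalent to MySQL's insert;

as a noun, it is equivalent to a MySQL database.

2. Type

An index can define one or more types, similar to the tables of a MySQL database; data of the same type is stored together.

3. Document

A document is one piece of data, in JSON format, saved under a type in an index, like a row in a MySQL table.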

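Elasticsearch 7: the type concept is removed

In a relational database, two tables are independent: columns with the same name in different tables do not affect each other. ES is different. Elasticsearch is a search engine built on Lucene, and fields with the same name under different types of one index end up being handled as the same field in Lucene.

For example, two `user_name` fields under two different types in the same index are really treated as one field, so you must define identical field mappings for it in both types. Otherwise the same field name in different types conflicts during processing, which lowers Lucene's efficiency.

Types were removed to make ES process data more efficiently.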

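Elasticsearch 7.x

The type parameter in URLs is optional. For example, indexing a document no longer requires a document type.

Elasticsearch 8.x

The type parameter in URLs is no longer supported.

Solution: migrate indices from multiple types to a single type, with a separate index for each document type.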

Inverted index
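An inverted index maps each term to the documents that contain it. A search can therefore look up its terms directly instead of scanning every document. Below is a minimal toy sketch in Python. Two simplifications are assumptions: the tokenizer only lowercases and splits, and ranking just counts matched terms. Elasticsearch instead relies on Lucene analyzers and BM25 scoring.

```python
from collections import defaultdict


def tokenize(text):
    # Toy analyzer (assumption): lowercase, then split on whitespace
    return text.lower().split()


def build_inverted_index(docs):
    """Map each term to the sorted list of IDs of the documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search(index, query):
    """Return IDs of documents containing any query term, most matched terms first."""
    matched = defaultdict(int)
    for term in tokenize(query):
        for doc_id in index.get(term, []):
            matched[doc_id] += 1
    # Ties are broken by document ID to keep the output deterministic
    return sorted(matched, key=lambda doc_id: (-matched[doc_id], doc_id))


docs = {
    1: "red sea action",
    2: "explore red sea action",
    3: "red sea special action",
    4: "red sea documentary",
    5: "agent red sea special exploration",
}
index = build_inverted_index(docs)
print(index["special"])                      # [3, 5]
print(search(index, "red sea exploration"))  # [5, 1, 2, 3, 4]
```

Document 2 does not get credit for "exploration" because the toy analyzer does no stemming ("explore" and "exploration" stay different terms).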

1. Install Elasticsearch in Docker

(1) Pull the Elasticsearch (stores and searches data) and Kibana (visualizes and queries data) images

```shell
docker pull elasticsearch:7.6.2
docker pull kibana:7.6.2
```

(2) Configure

```shell
mkdir -p /mydata/elasticsearch/config
mkdir -p /mydata/elasticsearch/data
# Let Elasticsearch accept HTTP connections on any network interface
echo "http.host: 0.0.0.0" >> /mydata/elasticsearch/config/elasticsearch.yml
chmod -R 777 /mydata/elasticsearch/
```

(3) Start Elasticsearch

```shell
docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /mydata/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /mydata/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -d elasticsearch:7.6.2
```

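--name: sets the container name

-p: 9200 serves HTTP requests (the REST API). 9300 is the transport port that nodes use to communicate with each other in a distributed cluster.

-e: sets environment variables. `discovery.type=single-node` runs ES as a single-node cluster. `ES_JAVA_OPTS` sets the JVM heap (initial 64 MB, maximum 512 MB here); without it, Elasticsearch falls back to its default heap size, which can take up most of a small VM's memory.

-v: bind mounts that link the configuration file, data directory, and plugins directory inside the container to the corresponding paths on the host VM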

Make the Elasticsearch container start automatically whenever Docker starts (e.g. on boot):

```shell
docker update elasticsearch --restart=always
```

(4) Start Kibana:

```shell
docker run --name kibana -e ELASTICSEARCH_HOSTS=http://192.168.56.10:9200 -p 5601:5601 -d kibana:7.6.2
```

Make Kibana start automatically as well:

```shell
docker update kibana --restart=always
```

(5) Test

Check the Elasticsearch version information at http://192.168.56.10:9200:

```json
{
  "name": "1e3900cda632",
  "cluster_name": "elasticsearch",
  "cluster_uuid": "zAxedSGQSgC86bmYA72C9Q",
  "version": {
    "number": "7.6.2",
    "build_flavor": "default",
    "build_type": "docker",
    "build_hash": "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",
    "build_date": "2020-03-26T06:34:37.794943Z",
    "build_snapshot": false,
    "lucene_version": "8.4.0",
    "minimum_wire_compatibility_version": "6.8.0",
    "minimum_index_compatibility_version": "6.0.0-beta1"
  },
  "tagline": "You Know, for Search"
}
```

Show the Elasticsearch node information at http://#:9200/_cat/nodes:

```
127.0.0.1 13 92 7 0.06 0.21 0.20 dilm * 1e3900cda632
```

Open Kibana: http://192.168.56.10:5601/app/kibana#/home

2. Basic queries

1) _cat

(1) GET /_cat/nodes: list all nodes

For example, http://192.168.56.10:9200/_cat/nodes:

```
127.0.0.1 15 91 3 0.13 0.38 0.31 dilm * 1e3900cda632
```

Note: `*` marks the master node of the cluster.

(2) GET /_cat/health: check the health of the cluster

For example, http://192.168.56.10:9200/_cat/health:

```
1648604850 01:47:30 elasticsearch green 1 1 3 3 0 0 0 0 - 100.0%
```

Note: green means the cluster is healthy.

(3) GET /_cat/master: show the master node

For example, http://192.168.56.10:9200/_cat/master:

```
Urxz2dOfSgCRyzGzs-7l6Q 127.0.0.1 127.0.0.1 1e3900cda632
```

(4) GET /_cat/indices: list all indices, the equivalent of MySQL's `show databases;`

For example, http://192.168.56.10:9200/_cat/indices:

```
green open .kibana_task_manager_1   X9B74aaIS9KHLlPUrYLVWA 1 0 2 0 34.2kb 34.2kb
green open .apm-agent-configuration ZXdJradmQcG-fbLFmRydKw 1 0 0 0   283b   283b
green open .kibana_1                9uZjKicuSPqv5qUSMWes3Q 1 0 7 0 34.5kb 34.5kb
```

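2) Index a document

Saving a document means specifying which index and type it goes under (like which table in which database) and which unique ID to use.

Both PUT and POST can create documents. With POST, if no ID is given, one is generated automatically and a new document is created. If an ID is given and that document already exists, it is updated and its version number is incremented. PUT can also either create or update, but it must be given an ID; omitting the ID returns an error. Because PUT requires an ID, it is usually used for updates.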

The following was tested in Postman:

On success the response status is 201 Created, meaning the document was inserted.

```json
{
  "_index": "customer",
  "_type": "external",
  "_id": "1",
  "_version": 1,
  "result": "created",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 0,
  "_primary_term": 1
}
```

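In the returned JSON, the fields that start with an underscore are metadata describing the document:

`"_index": "customer"`: the index (database) the document belongs to;

`"_type": "external"`: the type the document belongs to;

`"_id": "1"`: the ID of the saved document;

`"_version": 1`: the version of the saved document;

`"result": "created"`: a new document was created. If you PUT the same document again, the result becomes `updated` and the version number changes.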

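Now using POST:

Adding a document without an ID generates an ID automatically, and the result is `created`:

Posting the same data again still creates a new document:

Adding a document with an ID uses that ID, and the result is `created`:

Posting again with the same ID gives the result `updated`: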
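The create/update rules above can be summarized with a toy in-memory model. This is a hypothetical Python sketch, not the Elasticsearch client. `ToyIndex`, its methods, and the 20-character hex IDs are illustrative assumptions (real auto-generated IDs look different, e.g. `BMv_2H8BWhzCIFNne3Q7`). The model also ignores deletes and sequence numbers.

```python
import uuid


class ToyIndex:
    """Hypothetical model of how ES 7 treats POST/PUT with and without an ID."""

    def __init__(self):
        self.docs = {}  # doc_id -> (version, source)

    def _write(self, doc_id, source):
        existed = doc_id in self.docs
        version = self.docs[doc_id][0] + 1 if existed else 1
        self.docs[doc_id] = (version, source)
        return {"_id": doc_id, "_version": version,
                "result": "updated" if existed else "created"}

    def post(self, source, doc_id=None):
        # Without an ID a fresh one is generated, so the document is always new
        if doc_id is None:
            doc_id = uuid.uuid4().hex[:20]
        return self._write(doc_id, source)

    def put(self, doc_id, source):
        # PUT must name the document; ES rejects a PUT without an ID
        if doc_id is None:
            raise ValueError("PUT requires a document ID")
        return self._write(doc_id, source)


idx = ToyIndex()
print(idx.post({"name": "John Doe"}, doc_id="1"))  # {'_id': '1', '_version': 1, 'result': 'created'}
print(idx.post({"name": "John Doe"}, doc_id="1"))  # {'_id': '1', '_version': 2, 'result': 'updated'}
```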

3) Get a document: GET /customer/external/1

http://192.168.56.10:9200/customer/external/1

```json
{
  "_index": "customer",
  "_type": "external",
  "_id": "1",
  "_version": 2,
  "_seq_no": 1,
  "_primary_term": 1,
  "found": true,
  "_source": {
    "name": "John Doe"
  }
}
```

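Appending `?if_seq_no=1&if_primary_term=1` makes an update conditional. It is applied only when the document's current sequence number and primary term match these values; otherwise it is rejected.

Example: update the document with id=1 to name=1, then update it again to name=2. Initially `_seq_no=1` and `_primary_term=1`.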

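(1) Update name to 1

http://192.168.56.10:9200/customer/external/1?if_seq_no=1&if_primary_term=1

(2) Update name to 2, still passing seq_no=1

http://#:9200/customer/external/1?if_seq_no=1&if_primary_term=1

The update fails with a version conflict error (HTTP 409), because `_seq_no` has already changed.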

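(3) Query the latest data

http://192.168.56.10:9200/customer/external/1

`_seq_no` has now become 7. (There were several other updates in between, so we continue from seq_no 7.)

(4) Update again with the current values; this time it succeeds

http://192.168.56.10:9200/customer/external/1?if_seq_no=7&if_primary_term=1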

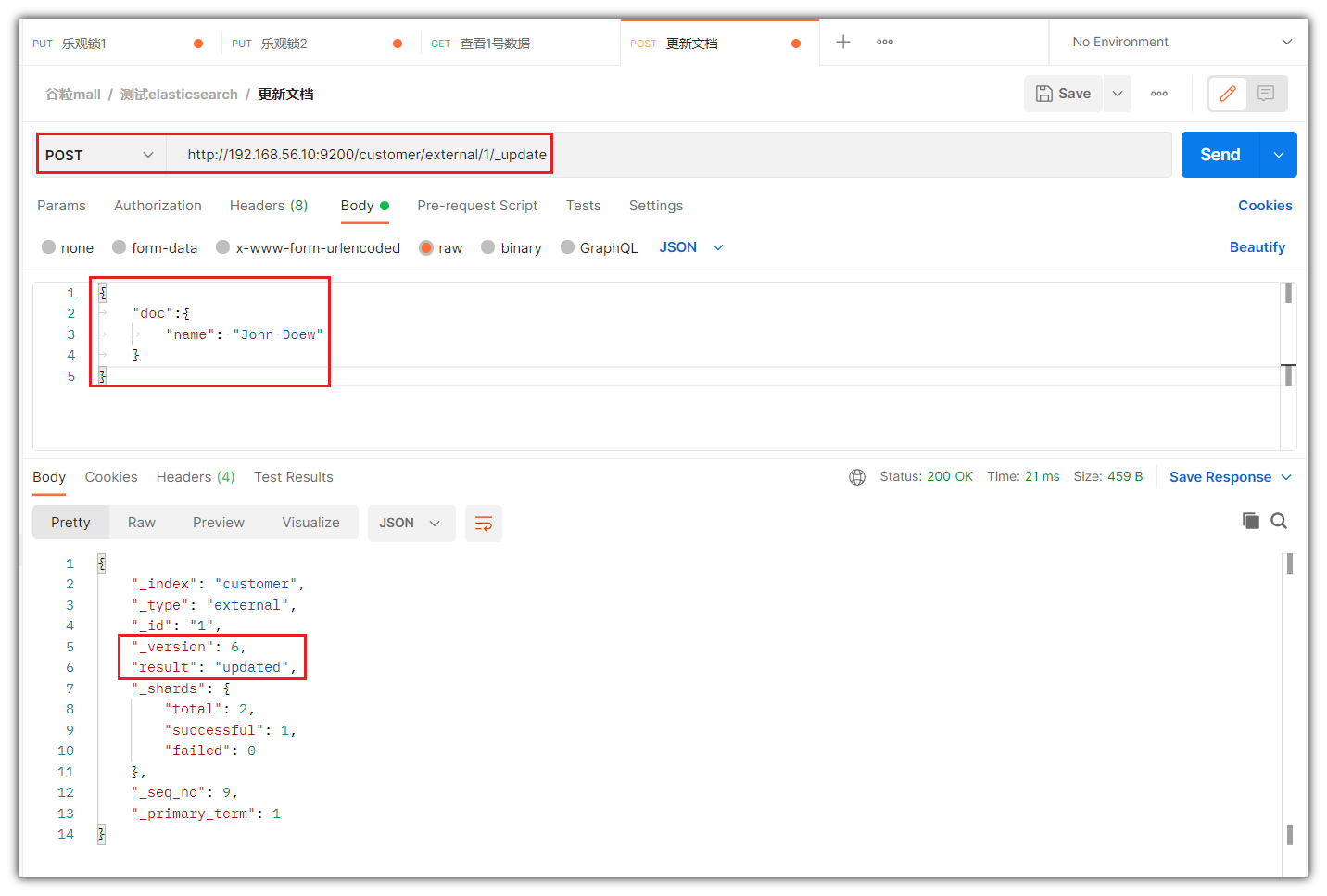
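The conditional update behaves like a compare-and-set. The hypothetical sketch below models it for a single document: an update that names a stale `_seq_no`/`_primary_term` is rejected, which in Elasticsearch is an HTTP 409 `version_conflict_engine_exception`. `ToyDoc` and `VersionConflict` are illustrative names. The model also simplifies one point: real sequence numbers are assigned per shard, so a document's `_seq_no` can advance by more than one between your reads.

```python
class VersionConflict(Exception):
    """Stands in for Elasticsearch's 409 version_conflict_engine_exception."""


class ToyDoc:
    """Hypothetical single-document model of if_seq_no / if_primary_term checks."""

    def __init__(self, source, seq_no, primary_term):
        self.source = source
        self.seq_no = seq_no
        self.primary_term = primary_term

    def update(self, new_source, if_seq_no=None, if_primary_term=None):
        conditional = if_seq_no is not None or if_primary_term is not None
        if conditional and (if_seq_no, if_primary_term) != (self.seq_no, self.primary_term):
            raise VersionConflict(
                f"current seq_no={self.seq_no}, primary_term={self.primary_term}")
        self.source = new_source
        self.seq_no += 1  # each successful write gets a new sequence number
        return self.seq_no


# Replays the example above, starting from _seq_no=1, _primary_term=1
doc = ToyDoc({"name": "John Doe"}, seq_no=1, primary_term=1)
doc.update({"name": 1}, if_seq_no=1, if_primary_term=1)      # succeeds, seq_no -> 2
try:
    doc.update({"name": 2}, if_seq_no=1, if_primary_term=1)  # stale seq_no
except VersionConflict as err:
    print("rejected:", err)
doc.update({"name": 2}, if_seq_no=doc.seq_no, if_primary_term=1)  # re-read value, succeeds
```

The usual pattern is therefore: read the document, remember its `_seq_no` and `_primary_term`, and pass them back with the write. On a conflict, re-read and retry.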

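4) Update a document

(1) POST with `_update`

```
POST customer/external/1/_update
{
  "doc": {
    "name": "John Doew"
  }
}
```

http://192.168.56.10:9200/customer/external/1/_update

Running the same update again does nothing, and the sequence number does not change.

An update through POST `_update` compares the new data with the existing document. If they are identical, no operation is performed and neither `_version` nor `_seq_no` changes (the response's `result` is `noop`).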

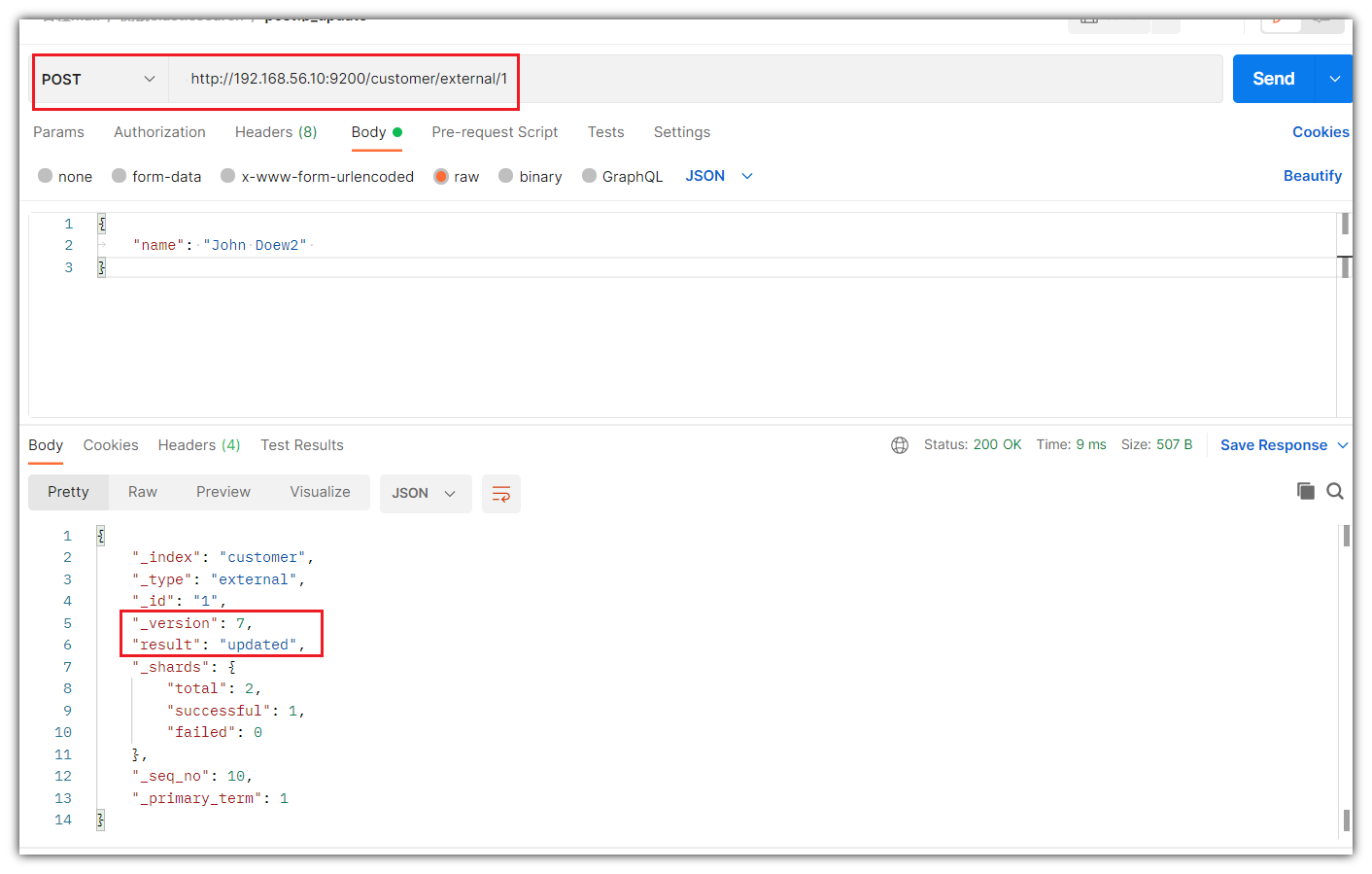

(2) POST without `_update`

```
POST customer/external/1
{
  "name": "John Doew2"
}
```

Repeating this request updates the document every time; the new data is not compared with the existing document.

(3) PUT without `_update`

```
PUT customer/external/1
{
  "name": "John Doew3"
}
```

Repeating this request also updates the document every time; the new data is not compared with the existing document.

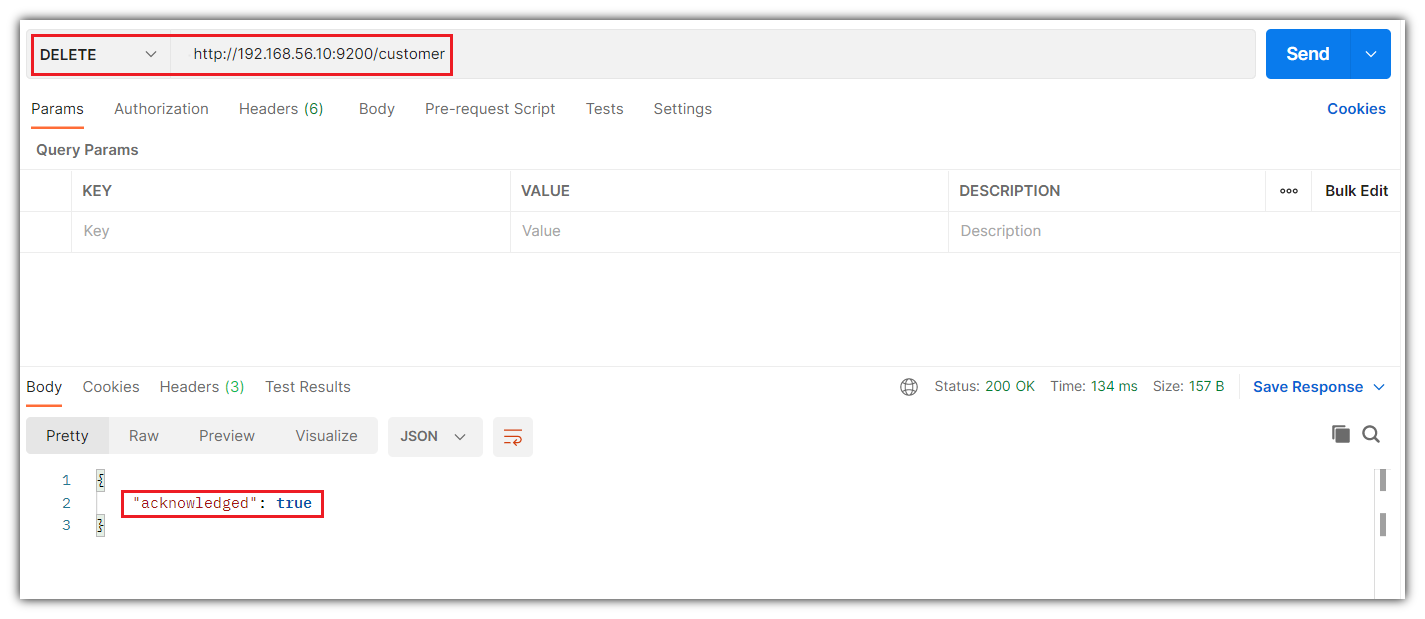
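The difference between the two update styles can be sketched as two small Python functions over plain dicts.

- `partial_update` mimics `POST .../_update` with a `doc`. It merges the given fields and reports `noop` when nothing changes. Elasticsearch does this by default; the update API's `detect_noop` option controls it.
- `full_index` mimics PUT/POST without `_update`. The body replaces the whole document, so it is always a write, and fields missing from the new body are dropped.

Both functions are illustrative assumptions. The shallow merge is also a simplification: Elasticsearch merges nested objects recursively.

```python
def partial_update(current, doc):
    """Like POST <index>/_update with {"doc": ...}: merge fields, detect no-ops."""
    merged = {**current, **doc}  # shallow merge (simplification)
    if merged == current:
        return current, "noop"   # nothing changed: no new version or seq_no
    return merged, "updated"


def full_index(current, source):
    """Like PUT/POST without _update: the new body replaces the whole document.

    `current` is unused on purpose: the old content does not matter.
    """
    return dict(source), "updated"  # always a write, even if nothing changed


doc = {"name": "John Doe", "age": 32}
doc, result = partial_update(doc, {"name": "John Doew"})
print(result, doc)                                    # updated {'name': 'John Doew', 'age': 32}
print(partial_update(doc, {"name": "John Doew"})[1])  # noop
print(full_index(doc, {"name": "John Doew3"}))        # ({'name': 'John Doew3'}, 'updated')
```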

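5) Delete a document or an index

```
DELETE customer/external/1
DELETE customer
```

Note: Elasticsearch provides no operation for deleting a type; only indices and documents can be deleted.

Example: delete the document with id=1, then query it again.

Example: delete the whole customer index.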

All indices before the deletion:

```
green  open .kibana_task_manager_1   X9B74aaIS9KHLlPUrYLVWA 1 0 2 0 34.2kb 34.2kb
green  open .apm-agent-configuration ZXdJradmQcG-fbLFmRydKw 1 0 0 0   283b   283b
green  open .kibana_1                9uZjKicuSPqv5qUSMWes3Q 1 0 7 0 34.5kb 34.5kb
yellow open customer                 S09RAZu5R0yfA8WgHhX3tA 1 1 4 6  9.1kb  9.1kb
```

Delete the `customer` index.

All indices after the deletion:

```
green open .kibana_task_manager_1   X9B74aaIS9KHLlPUrYLVWA 1 0 2 0 34.2kb 34.2kb
green open .apm-agent-configuration ZXdJradmQcG-fbLFmRydKw 1 0 0 0   283b   283b
green open .kibana_1                9uZjKicuSPqv5qUSMWes3Q 1 0 7 0 34.5kb 34.5kb
```

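6) Bulk operations in Elasticsearch: _bulk

Syntax:

```
{ action: { metadata }}\n
{ request body        }\n
{ action: { metadata }}\n
{ request body        }\n
```

In a bulk request the operations are independent of each other: if one of them fails, the remaining ones still execute.

The bulk API executes all actions in order. If a single action fails for any reason, it continues processing the remaining actions after it. When the bulk API returns, it provides the status of each action (in the same order they were sent), so you can check whether a specific action failed.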
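Each action and each source document must sit on its own line, so bulk bodies are easiest to generate programmatically. A minimal Python sketch follows; `build_bulk_body` is a hypothetical helper, not part of any client library. It serializes `(action, metadata, source)` triples into newline-delimited JSON, omits the body line for `delete`, and ends with the trailing newline that the `_bulk` API requires. If you send such a body over HTTP yourself, use the `Content-Type: application/x-ndjson` header.

```python
import json


def build_bulk_body(actions):
    """Serialize (action, metadata, source) triples into a _bulk NDJSON body.

    `source` is None for actions such as delete, which have no body line.
    json.dumps without indent keeps every object on a single line.
    """
    lines = []
    for action, metadata, source in actions:
        lines.append(json.dumps({action: metadata}))
        if source is not None:
            lines.append(json.dumps(source))
    return "\n".join(lines) + "\n"  # the final line must also end with a newline


body = build_bulk_body([
    ("index", {"_id": "1"}, {"name": "John Doe"}),
    ("index", {"_id": "2"}, {"name": "John Doe"}),
    ("delete", {"_id": "2"}, None),
])
print(body, end="")
```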

Postman does not support the following data format, so the examples below are run in Kibana.

Example 1: execute several operations

```
POST customer/external/_bulk
{"index":{"_id":"1"}}
{"name": "John Doe" }
{"index":{"_id":"2"}}
{"name": "John Doe" }
```

Result:

Example 2: bulk operations across indices (the target index is given in each action's metadata)

```
POST /_bulk
{ "delete": { "_index": "website", "_type": "blog", "_id": "123" }}
{ "create": { "_index": "website", "_type": "blog", "_id": "123" }}
{ "title": "my first blog post" }
{ "index": { "_index": "website", "_type": "blog" }}
{ "title": "my second blog post" }
{ "update": { "_index": "website", "_type": "blog", "_id": "123" }}
{ "doc": { "title": "my updated blog post" }}
```

Result:

```
#! Deprecation: [types removal] Specifying types in bulk requests is deprecated.
{
  "took": 344,
  "errors": false,
  "items": [
    { "delete": { "_index": "website", "_type": "blog", "_id": "123", "_version": 1, "result": "not_found", "_shards": { "total": 2, "successful": 1, "failed": 0 }, "_seq_no": 0, "_primary_term": 1, "status": 404 } },
    { "create": { "_index": "website", "_type": "blog", "_id": "123", "_version": 2, "result": "created", "_shards": { "total": 2, "successful": 1, "failed": 0 }, "_seq_no": 1, "_primary_term": 1, "status": 201 } },
    { "index": { "_index": "website", "_type": "blog", "_id": "BMv_2H8BWhzCIFNne3Q7", "_version": 1, "result": "created", "_shards": { "total": 2, "successful": 1, "failed": 0 }, "_seq_no": 2, "_primary_term": 1, "status": 201 } },
    { "update": { "_index": "website", "_type": "blog", "_id": "123", "_version": 3, "result": "updated", "_shards": { "total": 2, "successful": 1, "failed": 0 }, "_seq_no": 3, "_primary_term": 1, "status": 200 } }
  ]
}
```

7) Sample test data

A sample of fictitious JSON documents with customers' bank account information is provided. Each document has the following schema:

```json
{
  "account_number": 1,
  "balance": 39225,
  "firstname": "Amber",
  "lastname": "Duke",
  "age": 32,
  "gender": "M",
  "address": "880 Holmes Lane",
  "employer": "Pyrami",
  "email": "amberduke@pyrami.com",
  "city": "Brogan",
  "state": "IL"
}
```

Import the test data from https://github.com/zsxfa/gulimall/blob/main/es%E7%9A%84%E6%B5%8B%E8%AF%95%E6%95%B0%E6%8D%AE.json, using the file contents as the body of:

```
POST bank/account/_bulk
```

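3. Search

1) Search API

ES supports two basic ways of searching:

sending the search parameters in the REST request URI (URI + query parameters), e.g. `GET bank/_search?q=*&sort=account_number:asc`;

sending them in the REST request body (URI + request body).

Searching data:

```
GET /bank/_search
{
  "query": { "match_all": {} },
  "sort": [
    { "account_number": "asc" },
    { "balance": "desc" }
  ]
}
```

In HTTP client tools such as Postman, a GET request cannot carry a request body. Switching to POST works the same way: we POST a JSON-style query body to the `_search` API.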

(1) Only 10 documents are returned (the default `size`) because the results are paginated;

`from` and `size` select which page to fetch:

```
GET /bank/_search
{
  "query": { "match_all": {} },
  "sort": [
    { "account_number": "asc" },
    { "balance": "desc" }
  ],
  "from": 20,
  "size": 10
}
```
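`from` is the number of hits to skip, so page N of size S starts at `from = (N - 1) * S`. The request above (`from: 20`, `size: 10`) is therefore page 3. Below is a small hypothetical Python helper that builds such a request body as a dict. Note that, by default, `from + size` may not exceed 10,000 (the `index.max_result_window` setting); deeper paging calls for `search_after` or scrolling.

```python
def page_body(page, size=10, query=None):
    """Build a _search body for a 1-based page number (hypothetical helper)."""
    if page < 1 or size < 1:
        raise ValueError("page and size must be >= 1")
    return {
        "query": query or {"match_all": {}},
        "sort": [{"account_number": "asc"}, {"balance": "desc"}],
        "from": (page - 1) * size,  # number of hits to skip
        "size": size,               # number of hits to return
    }


print(page_body(3)["from"])  # 20
```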

(2) For the meaning of each response field, see: https://www.elastic.co/guide/en/elasticsearch/reference/current/getting-started-search.html

The response also provides the following information about the search request:

- `took`: how long it took Elasticsearch to run the query, in milliseconds
- `timed_out`: whether or not the search request timed out
- `_shards`: how many shards were searched and a breakdown of how many shards succeeded, failed, or were skipped
- `max_score`: the score of the most relevant document found
- `hits.total.value`: how many matching documents were found
- `hits.sort`: the document’s sort position (when not sorting by relevance score)
- `hits._score`: the document’s relevance score (not applicable when using match_all)

2) Query DSL

(1) Basic syntax

Elasticsearch provides a JSON-style DSL for executing queries, called the Query DSL. It is a very comprehensive query language.

Typical structure of a query clause:

```
QUERY_NAME: {
  ARGUMENT: VALUE,
  ARGUMENT: VALUE,
  ...
}
```

If it targets a specific field, the structure is:

```
{
  QUERY_NAME: {
    FIELD_NAME: {
      ARGUMENT: VALUE,
      ARGUMENT: VALUE,
      ...
    }
  }
}
```

For example:

```
GET bank/_search
{
  "query": {
    "match_all": {}
  },
  "from": 0,
  "size": 5,
  "sort": [
    {
      "account_number": {
        "order": "desc"
      }
    }
  ]
}
```

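`query` defines how to query;

`match_all` is a query type (it matches all documents). In ES, many query types can be combined inside `query` to build complex queries;

besides `query`, other parameters such as `sort` and `size` can be passed to shape the results;

`from` + `size` limit the result window, which implements pagination;

`sort` orders the results. With several fields, a later field only orders documents whose earlier fields are equal; otherwise the earlier field decides.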
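Multi-field sorting works like sorting by a tuple key: the second field only decides the order among documents whose first field is equal. Below is a plain-Python illustration with made-up records (the data is hypothetical), sorting by `account_number` ascending and then `balance` descending. In the bank data `account_number` appears to be unique, so there the second key never comes into play.

```python
accounts = [  # hypothetical records
    {"account_number": 2, "balance": 300},
    {"account_number": 1, "balance": 500},
    {"account_number": 1, "balance": 900},
]

# account_number asc; ties broken by balance desc (negation works for numbers)
ordered = sorted(accounts, key=lambda a: (a["account_number"], -a["balance"]))
print([(a["account_number"], a["balance"]) for a in ordered])
# [(1, 900), (1, 500), (2, 300)]
```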

(2) Return only some fields

```
GET bank/_search
{
  "query": {
    "match_all": {}
  },
  "from": 0,
  "size": 5,
  "sort": [
    {
      "account_number": {
        "order": "desc"
      }
    }
  ],
  "_source": ["balance", "firstname"]
}
```

Result:

```json
{
  "took": 6,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1000, "relation": "eq" },
    "max_score": null,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "999", "_score": null, "_source": { "firstname": "Dorothy", "balance": 6087 }, "sort": [999] },
      { "_index": "bank", "_type": "account", "_id": "998", "_score": null, "_source": { "firstname": "Letha", "balance": 16869 }, "sort": [998] },
      { "_index": "bank", "_type": "account", "_id": "997", "_score": null, "_source": { "firstname": "Combs", "balance": 25311 }, "sort": [997] },
      { "_index": "bank", "_type": "account", "_id": "996", "_score": null, "_source": { "firstname": "Andrews", "balance": 17541 }, "sort": [996] },
      { "_index": "bank", "_type": "account", "_id": "995", "_score": null, "_source": { "firstname": "Phelps", "balance": 21153 }, "sort": [995] }
    ]
  }
}
```

(3) match query

```
GET bank/_search
{
  "query": {
    "match": {
      "account_number": "20"
    }
  }
}
```

Here match returns the document whose account_number is 20. The 20 can also be written without quotes.

Result:

```json
{
  "took": 6,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 1.0,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "20", "_score": 1.0, "_source": { "account_number": 20, "balance": 16418, "firstname": "Elinor", "lastname": "Ratliff", "age": 36, "gender": "M", "address": "282 Kings Place", "employer": "Scentric", "email": "elinorratliff@scentric.com", "city": "Ribera", "state": "WA" } }
    ]
  }
}
```

```
GET bank/_search
{
  "query": {
    "match": {
      "address": "kings"
    }
  }
}
```

This is a full-text search: the query string is analyzed (split into terms) before matching, and the results are sorted by relevance score.

Result:

```json
{
  "took": 3,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 2, "relation": "eq" },
    "max_score": 5.990829,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "20", "_score": 5.990829, "_source": { "account_number": 20, "balance": 16418, "firstname": "Elinor", "lastname": "Ratliff", "age": 36, "gender": "M", "address": "282 Kings Place", "employer": "Scentric", "email": "elinorratliff@scentric.com", "city": "Ribera", "state": "WA" } },
      { "_index": "bank", "_type": "account", "_id": "722", "_score": 5.990829, "_source": { "account_number": 722, "balance": 27256, "firstname": "Roberts", "lastname": "Beasley", "age": 34, "gender": "F", "address": "305 Kings Hwy", "employer": "Quintity", "email": "robertsbeasley@quintity.com", "city": "Hayden", "state": "PA" } }
    ]
  }
}
```
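Roughly, match runs the query text through an analyzer and matches documents that contain any of the resulting terms (the default operator is OR). The Python sketch below approximates this. The regex tokenizer is a stand-in assumption for the standard analyzer, and relevance scoring is left out.

```python
import re


def analyze(text):
    # Approximation of the standard analyzer (assumption): split on non-word chars, lowercase
    return [token.lower() for token in re.findall(r"\w+", text)]


def match(field_value, query):
    """True if the analyzed query shares at least one term with the analyzed field."""
    return bool(set(analyze(query)) & set(analyze(field_value)))


addresses = {20: "282 Kings Place", 722: "305 Kings Hwy", 970: "990 Mill Road"}
print([doc_id for doc_id, addr in addresses.items() if match(addr, "kings")])  # [20, 722]
print(match("990 Mill Road", "mill lane"))  # True: "mill" alone is enough
```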

(4) match_phrase (phrase match): the query text is still analyzed, but the resulting terms must appear together and in the same order, so the value is matched as one whole phrase

```
GET bank/_search
{
  "query": {
    "match_phrase": {
      "address": "mill road"
    }
  }
}
```

This finds all documents whose address contains the phrase "mill road", with their relevance scores.

Result:

```json
{
  "took": 0,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 8.926605,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 8.926605, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } }
    ]
  }
}
```

The difference between match_phrase and match on the keyword subfield is shown by the following example:

```
GET bank/_search
{
  "query": {
    "match_phrase": {
      "address": "990 Mill"
    }
  }
}
```

Result:

```json
{
  "took": 0,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 10.806405,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 10.806405, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } }
    ]
  }
}
```

Using match on the keyword subfield:

```
GET bank/_search
{
  "query": {
    "match": {
      "address.keyword": "990 Mill"
    }
  }
}
```

Result: no document matches.

```json
{
  "took": 0,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 0, "relation": "eq" },
    "max_score": null,
    "hits": []
  }
}
```

Change the condition to "990 Mill Road":

```
GET bank/_search
{
  "query": {
    "match": {
      "address.keyword": "990 Mill Road"
    }
  }
}
```

One document is found:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 6.5032897,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 6.5032897, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } }
    ]
  }
}
```

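When matching on the keyword subfield of a text field, the condition must be the field's entire value: it is an exact match.

match_phrase is a phrase match: as long as the text contains the phrase (its terms in the same order), it matches.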
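The two behaviors can be contrasted in Python. match_phrase analyzes the query too, but requires its terms to appear consecutively and in order. A `keyword` subfield is not analyzed at all, so only the exact, case-sensitive full value matches. The regex tokenizer below stands in for the standard analyzer and is an assumption.

```python
import re


def analyze(text):
    # Approximation of the standard analyzer (assumption): split on non-word chars, lowercase
    return [token.lower() for token in re.findall(r"\w+", text)]


def match_phrase(field_value, phrase):
    """True if the phrase's terms occur in the field consecutively and in the same order."""
    doc_terms, phrase_terms = analyze(field_value), analyze(phrase)
    n = len(phrase_terms)
    return any(doc_terms[i:i + n] == phrase_terms for i in range(len(doc_terms) - n + 1))


def keyword_match(field_value, value):
    """A keyword field is not analyzed: only the exact, case-sensitive full value matches."""
    return field_value == value


address = "990 Mill Road"
print(match_phrase(address, "mill road"), match_phrase(address, "990 Mill"))       # True True
print(match_phrase(address, "road mill"))                                          # False
print(keyword_match(address, "990 Mill"), keyword_match(address, "990 Mill Road")) # False True
```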

(5) multi_match (multi-field match)

```
GET bank/_search
{
  "query": {
    "multi_match": {
      "query": "mill",
      "fields": ["state", "address"]
    }
  }
}
```

This matches documents whose state or address contains mill; the query string is analyzed during the search.

Result:

```json
{
  "took": 2,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 4, "relation": "eq" },
    "max_score": 5.4032025,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 5.4032025, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } },
      { "_index": "bank", "_type": "account", "_id": "136", "_score": 5.4032025, "_source": { "account_number": 136, "balance": 45801, "firstname": "Winnie", "lastname": "Holland", "age": 38, "gender": "M", "address": "198 Mill Lane", "employer": "Neteria", "email": "winnieholland@neteria.com", "city": "Urie", "state": "IL" } },
      { "_index": "bank", "_type": "account", "_id": "345", "_score": 5.4032025, "_source": { "account_number": 345, "balance": 9812, "firstname": "Parker", "lastname": "Hines", "age": 38, "gender": "M", "address": "715 Mill Avenue", "employer": "Baluba", "email": "parkerhines@baluba.com", "city": "Blackgum", "state": "KY" } },
      { "_index": "bank", "_type": "account", "_id": "472", "_score": 5.4032025, "_source": { "account_number": 472, "balance": 25571, "firstname": "Lee", "lastname": "Long", "age": 32, "gender": "F", "address": "288 Mill Street", "employer": "Comverges", "email": "leelong@comverges.com", "city": "Movico", "state": "MT" } }
    ]
  }
}
```

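(6) bool for compound queries

A compound clause can combine any other query clauses, including other compound clauses. This means compound clauses can be nested inside each other to express very complex logic.

must: all conditions listed in must have to be satisfied

```
GET bank/_search
{
  "query": {
    "bool": {
      "must": [
        { "match": { "address": "mill" } },
        { "match": { "gender": "M" } }
      ]
    }
  }
}
```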

must_not: the document must not match any of the conditions listed in must_not.

should: the document should preferably match the conditions listed in should.

Example: find documents with gender = M and an address containing mill

```
GET bank/_search
{
  "query": {
    "bool": {
      "must": [
        { "match": { "gender": "M" } },
        { "match": { "address": "mill" } }
      ]
    }
  }
}
```

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 3, "relation": "eq" },
    "max_score": 6.0824604,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 6.0824604, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } },
      { "_index": "bank", "_type": "account", "_id": "136", "_score": 6.0824604, "_source": { "account_number": 136, "balance": 45801, "firstname": "Winnie", "lastname": "Holland", "age": 38, "gender": "M", "address": "198 Mill Lane", "employer": "Neteria", "email": "winnieholland@neteria.com", "city": "Urie", "state": "IL" } },
      { "_index": "bank", "_type": "account", "_id": "345", "_score": 6.0824604, "_source": { "account_number": 345, "balance": 9812, "firstname": "Parker", "lastname": "Hines", "age": 38, "gender": "M", "address": "715 Mill Avenue", "employer": "Baluba", "email": "parkerhines@baluba.com", "city": "Blackgum", "state": "KY" } }
    ]
  }
}
```

must_not: excludes documents that match the given conditions

Example: gender = M and an address containing mill, but age not equal to 38

```
GET bank/_search
{
  "query": {
    "bool": {
      "must": [
        { "match": { "address": "mill" } },
        { "match": { "gender": "M" } }
      ],
      "must_not": [
        { "match": { "age": "38" } }
      ]
    }
  }
}
```

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 6.0824604,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 6.0824604, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } }
    ]
  }
}
```

should: the conditions listed in should are preferred. A document that matches them gets a higher relevance score, but the set of returned documents does not change. The exception is a bool query with no must (or filter) clause: then at least one should clause has to match, so should does decide which documents are returned.

Example: lastname should be Wallace

```
GET bank/_search
{
  "query": {
    "bool": {
      "must": [
        { "match": { "address": "mill" } },
        { "match": { "gender": "M" } }
      ],
      "must_not": [
        { "match": { "age": "18" } }
      ],
      "should": [
        { "match": { "lastname": "Wallace" } }
      ]
    }
  }
}
```

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 3, "relation": "eq" },
    "max_score": 12.585751,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 12.585751, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } },
      { "_index": "bank", "_type": "account", "_id": "136", "_score": 6.0824604, "_source": { "account_number": 136, "balance": 45801, "firstname": "Winnie", "lastname": "Holland", "age": 38, "gender": "M", "address": "198 Mill Lane", "employer": "Neteria", "email": "winnieholland@neteria.com", "city": "Urie", "state": "IL" } },
      { "_index": "bank", "_type": "account", "_id": "345", "_score": 6.0824604, "_source": { "account_number": 345, "balance": 9812, "firstname": "Parker", "lastname": "Hines", "age": 38, "gender": "M", "address": "715 Mill Avenue", "employer": "Baluba", "email": "parkerhines@baluba.com", "city": "Blackgum", "state": "KY" } }
    ]
  }
}
```

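As you can see, the more relevant a document is, the higher its score: the Wallace account, which also satisfies should, ranks first.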
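The clause semantics can be modeled in a few lines of Python. In this toy, each clause is a predicate, and a document's score is simply the number of `must` and `should` clauses it satisfies. Real Elasticsearch computes BM25 scores, so only the membership and ordering logic carries over. `must` and `must_not` decide membership, while `should` only raises the score, except that a bool with no `must` clause requires at least one `should` match. The records are trimmed-down versions of the four "mill" accounts from the responses above.

```python
def bool_query(docs, must=(), must_not=(), should=()):
    """Toy bool query over dicts; returns (score, id) pairs, best first."""
    results = []
    for doc in docs:
        if not all(clause(doc) for clause in must):
            continue  # every must clause has to match
        if any(clause(doc) for clause in must_not):
            continue  # any must_not match excludes the document
        should_hits = sum(1 for clause in should if clause(doc))
        if not must and should and should_hits == 0:
            continue  # with no must clause, at least one should clause must match
        results.append((len(must) + should_hits, doc["id"]))
    return sorted(results, key=lambda r: (-r[0], r[1]))


accounts = [
    {"id": 970, "gender": "M", "address": "990 Mill Road", "age": 28, "lastname": "Wallace"},
    {"id": 136, "gender": "M", "address": "198 Mill Lane", "age": 38, "lastname": "Holland"},
    {"id": 345, "gender": "M", "address": "715 Mill Avenue", "age": 38, "lastname": "Hines"},
    {"id": 472, "gender": "F", "address": "288 Mill Street", "age": 32, "lastname": "Long"},
]
mill = lambda d: "mill" in d["address"].lower().split()
male = lambda d: d["gender"] == "M"
age_38 = lambda d: d["age"] == 38
wallace = lambda d: d["lastname"] == "Wallace"

print(bool_query(accounts, must=[mill, male]))                     # [(2, 136), (2, 345), (2, 970)]
print(bool_query(accounts, must=[mill, male], must_not=[age_38]))  # [(2, 970)]
print(bool_query(accounts, must=[mill, male], should=[wallace]))   # [(3, 970), (2, 136), (2, 345)]
```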

(7) filter (result filtering)

Not all queries need to produce a score, especially those used only for filtering documents. To avoid computing scores, Elasticsearch automatically detects this situation and optimizes query execution.

```
GET bank/_search
{
  "query": {
    "bool": {
      "must": [
        { "match": { "address": "mill" } }
      ],
      "filter": {
        "range": {
          "balance": {
            "gte": "10000",
            "lte": "20000"
          }
        }
      }
    }
  }
}
```

This first finds all documents whose address matches mill, then filters the results to 10000 <= balance <= 20000.

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1, "relation": "eq" },
    "max_score": 5.4032025,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "970", "_score": 5.4032025, "_source": { "account_number": 970, "balance": 19648, "firstname": "Forbes", "lastname": "Wallace", "age": 28, "gender": "M", "address": "990 Mill Road", "employer": "Pheast", "email": "forbeswallace@pheast.com", "city": "Lopezo", "state": "AK" } }
    ]
  }
}
```

Each `must`, `should`, and `must_not` element in a Boolean query is referred to as a query clause. How well a document meets the criteria in each `must` or `should` clause contributes to the document’s relevance score. The higher the score, the better the document matches your search criteria. By default, Elasticsearch returns documents ranked by these relevance scores.


The criteria in a `must_not` clause is treated as a filter. It affects whether or not the document is included in the results, but does not contribute to how documents are scored. You can also explicitly specify arbitrary filters to include or exclude documents based on structured data.


A filter does not compute a relevance score:

```
GET bank/_search
{
  "query": {
    "bool": {
      "filter": {
        "range": {
          "balance": {
            "gte": "10000",
            "lte": "20000"
          }
        }
      }
    }
  }
}
```

Result:

```
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 213, "relation": "eq" },
    "max_score": 0.0,
    "hits": [
      { "_index": "bank", "_type": "account", "_id": "20", "_score": 0.0, "_source": { "account_number": 20, "balance": 16418, "firstname": "Elinor", "lastname": "Ratliff", "age": 36, "gender": "M", "address": "282 Kings Place", "employer": "Scentric", "email": "elinorratliff@scentric.com", "city": "Ribera", "state": "WA" } },
      { "_index": "bank", "_type": "account", "_id": "37", "_score": 0.0, "_source": { "account_number": 37, "balance": 18612, "firstname": "Mcgee", "lastname": "Mooney", "age": 39, "gender": "M", "address": "826 Fillmore Place", "employer": "Reversus", "email": "mcgeemooney@reversus.com", "city": "Tooleville", "state": "OK" } },
      ......
```

Every document has `"_score": 0.0`.
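A `range` filter with `gte`/`lte` keeps values inside an inclusive interval and contributes nothing to the score. When it is the only clause, every hit gets the same score of 0.0, as the response above shows. Below is a hypothetical Python equivalent. The helper name and the sample balances, including the two boundary values, are made up for illustration.

```python
def range_filter(docs, field, gte=None, lte=None):
    """Keep docs with gte <= doc[field] <= lte; a missing bound is open-ended."""
    hits = []
    for doc in docs:
        value = doc[field]
        if gte is not None and value < gte:
            continue
        if lte is not None and value > lte:
            continue
        hits.append({"_id": doc["id"], "_score": 0.0})  # a filter adds nothing to the score
    return hits


balances = [
    {"id": 970, "balance": 19648},
    {"id": 136, "balance": 45801},
    {"id": 345, "balance": 9812},
    {"id": 1, "balance": 10000},   # hypothetical boundary values
    {"id": 2, "balance": 20000},
]
print([h["_id"] for h in range_filter(balances, "balance", gte=10000, lte=20000)])
# [970, 1, 2]
```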

(8) term

Like match, term matches the value of a field. Use match for full-text (text) fields and term for non-text fields.

Avoid using the term query for text fields


By default, Elasticsearch changes the values of `text` fields as part of analysis. This can make finding exact matches for `text` field values difficult.


To search `text` field values, use the match query instead.


https://www.elastic.co/guide/en/elasticsearch/reference/7.6/query-dsl-term-query.html

Query with term:

```
GET bank/_search
{
  "query": {
    "term": {
      "address": "mill Road"
    }
  }
}
```

Result:

```json
{
  "took": 0,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 0, "relation": "eq" },
    "max_score": null,
    "hits": []
  }
}
```

Nothing matches.

Switching to match returns 32 documents.

In short: use match for full-text (text) fields and term for other, non-text fields.
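Why term finds nothing here: the text field `address` is indexed as lowercase terms such as `mill` and `road`. term looks up its input without analyzing it, so the single string `"mill Road"` matches no indexed term. match analyzes the input first. Below is a toy Python model of the two lookups against an inverted index; the tokenizer approximates the standard analyzer and is an assumption.

```python
import re
from collections import defaultdict


def analyze(text):
    # Approximation of the standard analyzer (assumption): split on non-word chars, lowercase
    return [token.lower() for token in re.findall(r"\w+", text)]


def build_index(docs):
    """Inverted index for an analyzed text field: term -> set of document IDs."""
    inverted = defaultdict(set)
    for doc_id, value in docs.items():
        for term in analyze(value):
            inverted[term].add(doc_id)
    return inverted


def term_query(inverted, value):
    # term does not analyze its input: the whole string must equal one indexed term
    return sorted(inverted.get(value, set()))


def match_query(inverted, text):
    # match analyzes its input, then ORs the postings of the resulting terms
    ids = set()
    for term in analyze(text):
        ids |= inverted.get(term, set())
    return sorted(ids)


inverted = build_index({970: "990 Mill Road", 136: "198 Mill Lane", 20: "282 Kings Place"})
print(term_query(inverted, "mill Road"))   # []
print(term_query(inverted, "mill"))        # [136, 970]
print(match_query(inverted, "mill Road"))  # [136, 970]
```

Even `term_query(inverted, "Mill")` returns nothing, because only the lowercase form was indexed.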

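(9) Aggregations

Aggregations provide the ability to group your data and extract statistics from it. The simplest aggregations are roughly equivalent to SQL GROUP BY and SQL aggregate functions. In Elasticsearch, a single search can return hits and, at the same time, aggregation results kept separate from those hits in the response. This is powerful and efficient: you can run a query plus multiple aggregations and get all of their results back from one concise API call, avoiding extra network round trips.

`"size": 0`

`size: 0` hides the search hits, so only the aggregation results are returned.

Aggregation syntax:

```
"aggs": {
  "AGG_NAME": {      # name of this aggregation, shown in the result set
    "AGG_TYPE": {}   # aggregation type, e.g. avg, terms
  }
}
```

Search for everyone whose address contains mill, and return their age distribution, average age, and average balance without returning the individual documents:

```
GET bank/_search
{
  "query": {
    "match": {
      "address": "Mill"
    }
  },
  "aggs": {
    "ageAgg": {
      "terms": {
        "field": "age",
        "size": 10        # suppose there are 100 possible ages: return only the top 10
      }
    },
    "ageAvg": {
      "avg": {
        "field": "age"
      }
    },
    "balanceAvg": {
      "avg": {
        "field": "balance"
      }
    }
  },
  "size": 0
}
```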

Result:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 4, "relation": "eq" },
    "max_score": null,
    "hits": []
  },
  "aggregations": {
    "ageAgg": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 0,
      "buckets": [
        { "key": 38, "doc_count": 2 },
        { "key": 28, "doc_count": 1 },
        { "key": 32, "doc_count": 1 }
      ]
    },
    "ageAvg": { "value": 34.0 },
    "balanceAvg": { "value": 25208.0 }
  }
}
```
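For comparison, the same numbers can be computed in plain Python from the four matching accounts in the earlier responses. The helpers are hypothetical. `terms_agg` orders buckets by `doc_count` descending, breaks ties by ascending key (consistent with the bucket order in these responses), and keeps only the top `size` buckets. `avg_agg` returns the mean, like the `avg` aggregation.

```python
from collections import Counter

# The four accounts whose address contains "mill" (values from the responses above)
mill_accounts = [
    {"account_number": 970, "age": 28, "balance": 19648},
    {"account_number": 136, "age": 38, "balance": 45801},
    {"account_number": 345, "age": 38, "balance": 9812},
    {"account_number": 472, "age": 32, "balance": 25571},
]


def terms_agg(docs, field, size=10):
    """Bucket docs by field value: doc_count desc, then key asc, top `size` buckets."""
    counts = Counter(doc[field] for doc in docs)
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [{"key": key, "doc_count": count} for key, count in ordered[:size]]


def avg_agg(docs, field):
    values = [doc[field] for doc in docs]
    return {"value": sum(values) / len(values)}


print(terms_agg(mill_accounts, "age"))
# [{'key': 38, 'doc_count': 2}, {'key': 28, 'doc_count': 1}, {'key': 32, 'doc_count': 1}]
print(avg_agg(mill_accounts, "age"), avg_agg(mill_accounts, "balance"))
# {'value': 34.0} {'value': 25208.0}
```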

A more complex example: group all accounts by age and compute the average balance within each age bucket (the sub-aggregation is named ageAvg, but it averages `balance`):

```
GET bank/_search
{
  "query": {
    "match_all": {}
  },
  "aggs": {
    "ageAgg": {
      "terms": {
        "field": "age",
        "size": 100
      },
      "aggs": {
        "ageAvg": {
          "avg": {
            "field": "balance"
          }
        }
      }
    }
  },
  "size": 0
}
```

Output:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1000, "relation": "eq" },
    "max_score": null,
    "hits": []
  },
  "aggregations": {
    "ageAgg": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 0,
      "buckets": [
        { "key": 31, "doc_count": 61, "ageAvg": { "value": 28312.918032786885 } },
        { "key": 39, "doc_count": 60, "ageAvg": { "value": 25269.583333333332 } },
        { "key": 26, "doc_count": 59, "ageAvg": { "value": 23194.813559322032 } },
        { "key": 32, "doc_count": 52, "ageAvg": { "value": 23951.346153846152 } },
        { "key": 35, "doc_count": 52, "ageAvg": { "value": 22136.69230769231 } },
        { "key": 36, "doc_count": 52, "ageAvg": { "value": 22174.71153846154 } },
        { "key": 22, "doc_count": 51, "ageAvg": { "value": 24731.07843137255 } },
        { "key": 28, "doc_count": 51, "ageAvg": { "value": 28273.882352941175 } },
        { "key": 33, "doc_count": 50, "ageAvg": { "value": 25093.94 } },
        { "key": 34, "doc_count": 49, "ageAvg": { "value": 26809.95918367347 } },
        { "key": 30, "doc_count": 47, "ageAvg": { "value": 22841.106382978724 } },
        { "key": 21, "doc_count": 46, "ageAvg": { "value": 26981.434782608696 } },
        { "key": 40, "doc_count": 45, "ageAvg": { "value": 27183.17777777778 } },
        { "key": 20, "doc_count": 44, "ageAvg": { "value": 27741.227272727272 } },
        { "key": 23, "doc_count": 42, "ageAvg": { "value": 27314.214285714286 } },
        { "key": 24, "doc_count": 42, "ageAvg": { "value": 28519.04761904762 } },
        { "key": 25, "doc_count": 42, "ageAvg": { "value": 27445.214285714286 } },
        { "key": 37, "doc_count": 42, "ageAvg": { "value": 27022.261904761905 } },
        { "key": 27, "doc_count": 39, "ageAvg": { "value": 21471.871794871793 } },
        { "key": 38, "doc_count": 39, "ageAvg": { "value": 26187.17948717949 } },
        { "key": 29, "doc_count": 35, "ageAvg": { "value": 29483.14285714286 } }
      ]
    }
  }
}
```

Get the distribution of all ages. For each age bucket, return the average balance of M, the average balance of F, and the overall average balance for that age:

```
GET bank/_search
{
  "query": { "match_all": {} },
  "aggs": {
    "ageAgg": {
      "terms": { "field": "age", "size": 100 },
      "aggs": {
        "genderAgg": {
          "terms": { "field": "gender.keyword" },
          "aggs": {
            "balanceAvg": { "avg": { "field": "balance" } }
          }
        },
        "ageBalanceAvg": { "avg": { "field": "balance" } }
      }
    }
  },
  "size": 0
}
```

Output:

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": { "total": { "value": 1000, "relation": "eq" }, "max_score": null, "hits": [] },
  "aggregations": {
    "ageAgg": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 0,
      "buckets": [
        { "key": 31, "doc_count": 61,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 35, "balanceAvg": { "value": 29565.628571428573 } },
            { "key": "F", "doc_count": 26, "balanceAvg": { "value": 26626.576923076922 } } ] },
          "ageBalanceAvg": { "value": 28312.918032786885 } },
        { "key": 39, "doc_count": 60,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 38, "balanceAvg": { "value": 26348.684210526317 } },
            { "key": "M", "doc_count": 22, "balanceAvg": { "value": 23405.68181818182 } } ] },
          "ageBalanceAvg": { "value": 25269.583333333332 } },
        { "key": 26, "doc_count": 59,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 32, "balanceAvg": { "value": 25094.78125 } },
            { "key": "F", "doc_count": 27, "balanceAvg": { "value": 20943.0 } } ] },
          "ageBalanceAvg": { "value": 23194.813559322032 } },
        { "key": 32, "doc_count": 52,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 28, "balanceAvg": { "value": 22941.964285714286 } },
            { "key": "F", "doc_count": 24, "balanceAvg": { "value": 25128.958333333332 } } ] },
          "ageBalanceAvg": { "value": 23951.346153846152 } },
        { "key": 35, "doc_count": 52,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 28, "balanceAvg": { "value": 24226.321428571428 } },
            { "key": "F", "doc_count": 24, "balanceAvg": { "value": 19698.791666666668 } } ] },
          "ageBalanceAvg": { "value": 22136.69230769231 } },
        { "key": 36, "doc_count": 52,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 31, "balanceAvg": { "value": 20884.677419354837 } },
            { "key": "F", "doc_count": 21, "balanceAvg": { "value": 24079.04761904762 } } ] },
          "ageBalanceAvg": { "value": 22174.71153846154 } },
        { "key": 22, "doc_count": 51,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 27, "balanceAvg": { "value": 22152.74074074074 } },
            { "key": "M", "doc_count": 24, "balanceAvg": { "value": 27631.708333333332 } } ] },
          "ageBalanceAvg": { "value": 24731.07843137255 } },
        { "key": 28, "doc_count": 51,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 31, "balanceAvg": { "value": 27076.8064516129 } },
            { "key": "M", "doc_count": 20, "balanceAvg": { "value": 30129.35 } } ] },
          "ageBalanceAvg": { "value": 28273.882352941175 } },
        { "key": 33, "doc_count": 50,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 26, "balanceAvg": { "value": 26437.615384615383 } },
            { "key": "M", "doc_count": 24, "balanceAvg": { "value": 23638.291666666668 } } ] },
          "ageBalanceAvg": { "value": 25093.94 } },
        { "key": 34, "doc_count": 49,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 30, "balanceAvg": { "value": 26039.166666666668 } },
            { "key": "M", "doc_count": 19, "balanceAvg": { "value": 28027.0 } } ] },
          "ageBalanceAvg": { "value": 26809.95918367347 } },
        { "key": 30, "doc_count": 47,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 25, "balanceAvg": { "value": 25316.16 } },
            { "key": "M", "doc_count": 22, "balanceAvg": { "value": 20028.545454545456 } } ] },
          "ageBalanceAvg": { "value": 22841.106382978724 } },
        { "key": 21, "doc_count": 46,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 24, "balanceAvg": { "value": 28210.916666666668 } },
            { "key": "M", "doc_count": 22, "balanceAvg": { "value": 25640.18181818182 } } ] },
          "ageBalanceAvg": { "value": 26981.434782608696 } },
        { "key": 40, "doc_count": 45,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 24, "balanceAvg": { "value": 26474.958333333332 } },
            { "key": "F", "doc_count": 21, "balanceAvg": { "value": 27992.571428571428 } } ] },
          "ageBalanceAvg": { "value": 27183.17777777778 } },
        { "key": 20, "doc_count": 44,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 27, "balanceAvg": { "value": 29047.444444444445 } },
            { "key": "F", "doc_count": 17, "balanceAvg": { "value": 25666.647058823528 } } ] },
          "ageBalanceAvg": { "value": 27741.227272727272 } },
        { "key": 23, "doc_count": 42,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 24, "balanceAvg": { "value": 27730.75 } },
            { "key": "F", "doc_count": 18, "balanceAvg": { "value": 26758.833333333332 } } ] },
          "ageBalanceAvg": { "value": 27314.214285714286 } },
        { "key": 24, "doc_count": 42,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 23, "balanceAvg": { "value": 29414.521739130436 } },
            { "key": "M", "doc_count": 19, "balanceAvg": { "value": 27435.052631578947 } } ] },
          "ageBalanceAvg": { "value": 28519.04761904762 } },
        { "key": 25, "doc_count": 42,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 23, "balanceAvg": { "value": 29336.08695652174 } },
            { "key": "F", "doc_count": 19, "balanceAvg": { "value": 25156.263157894737 } } ] },
          "ageBalanceAvg": { "value": 27445.214285714286 } },
        { "key": 37, "doc_count": 42,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 23, "balanceAvg": { "value": 25015.739130434784 } },
            { "key": "F", "doc_count": 19, "balanceAvg": { "value": 29451.21052631579 } } ] },
          "ageBalanceAvg": { "value": 27022.261904761905 } },
        { "key": 27, "doc_count": 39,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 21, "balanceAvg": { "value": 21618.85714285714 } },
            { "key": "M", "doc_count": 18, "balanceAvg": { "value": 21300.38888888889 } } ] },
          "ageBalanceAvg": { "value": 21471.871794871793 } },
        { "key": 38, "doc_count": 39,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "F", "doc_count": 20, "balanceAvg": { "value": 27931.65 } },
            { "key": "M", "doc_count": 19, "balanceAvg": { "value": 24350.894736842107 } } ] },
          "ageBalanceAvg": { "value": 26187.17948717949 } },
        { "key": 29, "doc_count": 35,
          "genderAgg": { "doc_count_error_upper_bound": 0, "sum_other_doc_count": 0, "buckets": [
            { "key": "M", "doc_count": 23, "balanceAvg": { "value": 29943.17391304348 } },
            { "key": "F", "doc_count": 12, "balanceAvg": { "value": 28601.416666666668 } } ] },
          "ageBalanceAvg": { "value": 29483.14285714286 } }
      ]
    }
  }
}
```
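To make the nesting concrete, here is a minimal in-memory Java sketch (Java 16+, because it uses records) of what the ageAgg → genderAgg → balanceAvg request computes. The Account record, the class and method names, and the sample data are hypothetical. It only mirrors the bucketing logic, not how Elasticsearch actually executes aggregations. Buckets are sorted by doc count descending with ties broken by ascending key, which matches the order visible in the output above.

```java
import java.util.*;
import java.util.stream.Collectors;

// Hypothetical sketch: terms(age) -> [terms(gender) -> avg(balance)] + avg(balance)
class NestedAggSketch {
    record Account(int age, String gender, long balance) {}

    // One age bucket: doc count, overall average balance, average balance per gender
    record AgeBucket(int age, long docCount, double ageBalanceAvg,
                     Map<String, Double> genderBalanceAvg) {}

    static List<AgeBucket> aggregate(List<Account> accounts) {
        // "ageAgg": group documents by the age term
        Map<Integer, List<Account>> byAge = accounts.stream()
                .collect(Collectors.groupingBy(Account::age));
        List<AgeBucket> buckets = new ArrayList<>();
        for (Map.Entry<Integer, List<Account>> e : byAge.entrySet()) {
            List<Account> docs = e.getValue();
            // "ageBalanceAvg": average over every document in the age bucket
            double overall = docs.stream().mapToLong(Account::balance).average().orElse(Double.NaN);
            // "genderAgg" with its own "balanceAvg" sub-aggregation
            Map<String, Double> perGender = docs.stream().collect(Collectors.groupingBy(
                    Account::gender, TreeMap::new, Collectors.averagingLong(Account::balance)));
            buckets.add(new AgeBucket(e.getKey(), docs.size(), overall, perGender));
        }
        // doc_count descending, then key ascending
        buckets.sort(Comparator.comparingLong(AgeBucket::docCount).reversed()
                .thenComparingInt(AgeBucket::age));
        return buckets;
    }
}
```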

3) Mapping

(1) Field types

Core types:

String: text, keyword

Numeric: long, integer, short, byte, double, float, half_float, scaled_float

Date: date

Boolean: boolean

Binary: binary

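Complex types:

Array: there is no dedicated array type. Any field can hold zero or more values, as long as all of them have the same type.

Object: object, for a single JSON object

Nested: nested, for arrays of JSON objects

Geo types:

Geo-point: geo_point, for latitude/longitude coordinates

Geo-shape: geo_shape, for complex shapes such as polygons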

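Specialized types:

IP: ip, for IPv4 and IPv6 addresses

Completion: completion, provides auto-complete suggestions

Token count: token_count, counts the number of tokens in a string

Attachment: see the mapper-attachments plugin, which can index attachments such as Microsoft Office, OpenDocument, ePub, and HTML files as the attachment data type. In Elasticsearch 6.0 and later this plugin was replaced by the ingest-attachment plugin.

Percolator: percolator, stores queries written in the Query DSL so that documents can be matched against them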

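Multi-fields:

It is often useful to index the same field in different ways for different purposes. For example, a string field can be mapped as a text field for full-text search and also as a keyword field for sorting and aggregations. Likewise, a text field can be indexed with the standard analyzer, the english analyzer, and the french analyzer.

This is the purpose of multi-fields. Most data types support multi-fields through the fields parameter.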
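A simplified Java sketch of the idea: one source value produces different terms depending on the sub-field type. The text path only lowercases and splits on non-alphanumeric characters, as a stand-in for a real analyzer. The keyword path keeps the whole value unless it is longer than ignore_above (the lastname.keyword multi-field later in this section uses ignore_above: 256). Class and method names are hypothetical.

```java
import java.util.*;

// Hypothetical sketch of multi-fields: the same value indexed as text and as keyword
class MultiFieldSketch {
    // "text" sub-field: analyzed into lowercase word terms (rough analyzer stand-in)
    static List<String> asText(String value) {
        List<String> terms = new ArrayList<>();
        for (String t : value.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+")) {
            if (!t.isEmpty()) terms.add(t);
        }
        return terms;
    }

    // "keyword" sub-field: the exact value as one term, which sorting and aggregations need;
    // values longer than ignoreAbove characters are not indexed at all
    static List<String> asKeyword(String value, int ignoreAbove) {
        return value.length() > ignoreAbove ? List.of() : List.of(value);
    }
}
```

A full-text query on "york" would hit the text terms, while a terms aggregation would group on the single keyword term "New York City".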

(2) Mapping

(3) Changes in newer versions: Elasticsearch 7 removes the type concept


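Elasticsearch 7.x: the type parameter in URLs is optional. For example, indexing a document no longer requires a document type.

Elasticsearch 8.x: the type parameter in URLs is no longer supported.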
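A hypothetical Java sketch of the pre-7.x constraint behind removing types: an index has a single field namespace in Lucene, so every type that declares a field with a given name (such as user_name) must agree on its mapping. The class name and error message are made up for illustration.

```java
import java.util.*;

// Hypothetical sketch: all types of one index share a single field -> type map
class SharedFieldMappings {
    private final Map<String, String> fields = new HashMap<>();

    void putTypeMapping(String typeName, Map<String, String> typeFields) {
        // Validate everything first so a rejected mapping leaves the index unchanged
        for (Map.Entry<String, String> f : typeFields.entrySet()) {
            String existing = fields.get(f.getKey());
            if (existing != null && !existing.equals(f.getValue())) {
                throw new IllegalArgumentException("type [" + typeName + "]: field [" + f.getKey()
                        + "] is already mapped as [" + existing + "], cannot map it as [" + f.getValue() + "]");
            }
        }
        fields.putAll(typeFields);
    }

    Map<String, String> fields() {
        return Collections.unmodifiableMap(fields);
    }
}
```

With one index per document type, as 7.x encourages, each index has its own map and the conflict cannot arise.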

Solution:

Migrate the data of each type in an existing index into its own target index. See Data migration below.

Elasticsearch 7.x

Specifying types in requests is deprecated. For instance, indexing a document no longer requires a document type. The new index APIs are PUT {index}/_doc/{id} in case of explicit ids and POST {index}/_doc for auto-generated ids. Note that in 7.0, _doc is a permanent part of the path, and represents the endpoint name rather than the document type.

The include_type_name parameter in the index creation, index template, and mapping APIs will default to false. Setting the parameter at all will result in a deprecation warning.

The _default_ mapping type is removed.

Elasticsearch 8.x

Specifying types in requests is no longer supported.

The include_type_name parameter is removed.

Creating a mapping: create an index and specify its mapping

```
PUT /my_index
{
  "mappings": {
    "properties": {
      "age": { "type": "integer" },
      "email": { "type": "keyword" },
      "name": { "type": "text" }
    }
  }
}
```

Output:

```json
{
  "acknowledged": true,
  "shards_acknowledged": true,
  "index": "my_index"
}
```

Viewing the mapping (`GET /my_index`). Output:

```json
{
  "my_index": {
    "aliases": {},
    "mappings": {
      "properties": {
        "age": { "type": "integer" },
        "email": { "type": "keyword" },
        "name": { "type": "text" }
      }
    },
    "settings": {
      "index": {
        "creation_date": "1648691594218",
        "number_of_shards": "1",
        "number_of_replicas": "1",
        "uuid": "2gowX9taSjmvYBz-OaDILQ",
        "version": { "created": "7060299" },
        "provided_name": "my_index"
      }
    }
  }
}
```

Adding a new field mapping

```
PUT /my_index/_mapping
{
  "properties": {
    "employee-id": {
      "type": "keyword",
      "index": false
    }
  }
}
```

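Here "index": false means the new field is not indexed, so it cannot be searched. It is only a redundant field whose value is still kept in _source.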
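A toy inverted index in Java illustrating what "index": false changes. Indexed fields get postings (term → document ids), while every field stays in _source. The class is hypothetical and far simpler than Lucene. For a non-indexed field it throws, imitating how Elasticsearch 7.x rejects a search on a field that is not indexed instead of returning no hits (newer versions may behave differently).

```java
import java.util.*;

// Hypothetical toy index: postings for indexed fields, full documents as _source
class TinyIndex {
    private final Map<String, Map<String, Set<String>>> postings = new HashMap<>(); // field -> term -> doc ids
    private final Map<String, Map<String, String>> source = new HashMap<>();        // doc id -> _source
    private final Set<String> notIndexed;

    TinyIndex(Set<String> notIndexedFields) {
        this.notIndexed = notIndexedFields;
    }

    void index(String id, Map<String, String> doc) {
        source.put(id, doc); // _source always keeps every field
        for (Map.Entry<String, String> f : doc.entrySet()) {
            if (notIndexed.contains(f.getKey())) continue; // "index": false -> no postings
            postings.computeIfAbsent(f.getKey(), k -> new HashMap<>())
                    .computeIfAbsent(f.getValue(), k -> new TreeSet<>())
                    .add(id);
        }
    }

    // Exact-value (keyword-style) lookup
    Set<String> termQuery(String field, String value) {
        if (notIndexed.contains(field)) {
            throw new IllegalArgumentException("Cannot search on field [" + field + "] since it is not indexed.");
        }
        return postings.getOrDefault(field, Map.of()).getOrDefault(value, Set.of());
    }

    Map<String, String> get(String id) {
        return source.get(id);
    }
}
```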

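Updating a mapping. An existing field mapping cannot be updated. To change it, create a new index with the desired mapping and migrate the data into it.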

Data migration. First create new_twitter with the correct mapping, then migrate the data as follows (`POST _reindex` is fixed syntax):

```
POST _reindex
{
  "source": { "index": "twitter" },
  "dest": { "index": "new_twitter" }
}
```

To migrate the data under a type of an old index, add the type to the source:

```
POST _reindex
{
  "source": { "index": "twitter", "type": "twitter" },
  "dest": { "index": "new_twitter" }
}
```

For more details, see: https://www.elastic.co/guide/en/elasticsearch/reference/7.6/docs-reindex.html

```
GET /bank/_search
```

Response (excerpt):

```
{
  "took": 0,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 1000, "relation": "eq" },
    "max_score": 1.0,
    "hits": [
      {
        "_index": "bank",
        "_type": "account",
        "_id": "1",
        "_score": 1.0,
        "_source": {
          "account_number": 1,
          "balance": 39225,
          "firstname": "Amber",
          "lastname": "Duke",
          "age": 32,
          "gender": "M",
          "address": "880 Holmes Lane",
          "employer": "Pyrami",
          "email": "amberduke@pyrami.com",
          "city": "Brogan",
          "state": "IL"
        }
      },
      ...
```

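We want to change the type of age to integer. Because an existing field mapping cannot be changed, create a new index newbank with the desired mapping: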

```
PUT /newbank
{
  "mappings": {
    "properties": {
      "account_number": { "type": "long" },
      "address": { "type": "text" },
      "age": { "type": "integer" },
      "balance": { "type": "long" },
      "city": { "type": "keyword" },
      "email": { "type": "keyword" },
      "employer": { "type": "keyword" },
      "firstname": { "type": "text" },
      "gender": { "type": "keyword" },
      "lastname": {
        "type": "text",
        "fields": {
          "keyword": { "type": "keyword", "ignore_above": 256 }
        }
      },
      "state": { "type": "keyword" }
    }
  }
}
```

View the mapping of newbank:

The mapping type of age has been changed to integer.

Migrate the data from bank to newbank:

```
POST _reindex
{
  "source": { "index": "bank", "type": "account" },
  "dest": { "index": "newbank" }
}
```

Output:

```
#! Deprecation: [types removal] Specifying types in reindex requests is deprecated.
{
  "took": 768,
  "timed_out": false,
  "total": 1000,
  "updated": 0,
  "created": 1000,
  "deleted": 0,
  "batches": 1,
  "version_conflicts": 0,
  "noops": 0,
  "retries": { "bulk": 0, "search": 0 },
  "throttled_millis": 0,
  "requests_per_second": -1.0,
  "throttled_until_millis": 0,
  "failures": []
}
```

Check the data in newbank.
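A minimal Java sketch of the created/updated counters in the _reindex response, assuming the default op_type (index). Each source document is written to the destination under the same id. It counts as created when the id is new and as updated when the id already exists. _source is copied unchanged. It is the destination mapping (age as integer in newbank) that decides how fields are indexed. All names are hypothetical.

```java
import java.util.*;

// Hypothetical sketch of _reindex bookkeeping with op_type "index"
class ReindexSketch {
    record Result(int total, int created, int updated) {}

    static Result reindex(Map<String, Map<String, Object>> source,
                          Map<String, Map<String, Object>> dest) {
        int created = 0, updated = 0;
        for (Map.Entry<String, Map<String, Object>> e : source.entrySet()) {
            // Copy _source as-is under the same document id
            Map<String, Object> previous = dest.put(e.getKey(), new HashMap<>(e.getValue()));
            if (previous == null) created++; else updated++;
        }
        return new Result(source.size(), created, updated);
    }
}
```

Running the same reindex twice would therefore report every document as updated the second time, instead of created.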

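4) Tokenization

A tokenizer receives a stream of characters, breaks it into individual tokens (usually individual words), and outputs a stream of tokens.

For example, the whitespace tokenizer splits text whenever it encounters whitespace. It would split the text "Quick brown fox!" into [Quick, brown, fox!].

The tokenizer is also responsible for recording the order, or position, of each term (used for phrase and word-proximity queries), and the start and end character offsets of the original word that each term represents (used for highlighting search results).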
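The description above can be sketched as a small Java whitespace tokenizer that records each token's position and its start/end character offsets (end exclusive, as in the _analyze output). It is an illustration with hypothetical names, not Lucene's WhitespaceTokenizer.

```java
import java.util.*;

// Hypothetical whitespace tokenizer that tracks positions and character offsets
class WhitespaceTokenizerSketch {
    record Token(String term, int position, int startOffset, int endOffset) {}

    static List<Token> tokenize(String text) {
        List<Token> tokens = new ArrayList<>();
        int i = 0, position = 0;
        while (i < text.length()) {
            // Skip the whitespace between tokens
            while (i < text.length() && Character.isWhitespace(text.charAt(i))) i++;
            int start = i;
            // Consume one run of non-whitespace characters
            while (i < text.length() && !Character.isWhitespace(text.charAt(i))) i++;
            if (i > start) tokens.add(new Token(text.substring(start, i), position++, start, i));
        }
        return tokens;
    }
}
```

For "Quick brown fox!" this yields Quick (0, 0–5), brown (1, 6–11) and fox! (2, 12–16); the punctuation stays attached because only whitespace splits.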

Elasticsearch provides many built-in tokenizers that can be used to build custom analyzers.

About analyzers: https://www.elastic.co/guide/en/elasticsearch/reference/7.6/analysis.html

```
POST _analyze
{
  "analyzer": "standard",
  "text": "The 2 QUICK Brown-Foxes jumped over the lazy dog's bone."
}
```

Result:

```json
{
  "tokens": [
    { "token": "the", "start_offset": 0, "end_offset": 3, "type": "<ALPHANUM>", "position": 0 },
    { "token": "2", "start_offset": 4, "end_offset": 5, "type": "<NUM>", "position": 1 },
    { "token": "quick", "start_offset": 6, "end_offset": 11, "type": "<ALPHANUM>", "position": 2 },
    { "token": "brown", "start_offset": 12, "end_offset": 17, "type": "<ALPHANUM>", "position": 3 },
    { "token": "foxes", "start_offset": 18, "end_offset": 23, "type": "<ALPHANUM>", "position": 4 },
    { "token": "jumped", "start_offset": 24, "end_offset": 30, "type": "<ALPHANUM>", "position": 5 },
    { "token": "over", "start_offset": 31, "end_offset": 35, "type": "<ALPHANUM>", "position": 6 },
    { "token": "the", "start_offset": 36, "end_offset": 39, "type": "<ALPHANUM>", "position": 7 },
    { "token": "lazy", "start_offset": 40, "end_offset": 44, "type": "<ALPHANUM>", "position": 8 },
    { "token": "dog's", "start_offset": 45, "end_offset": 50, "type": "<ALPHANUM>", "position": 9 },
    { "token": "bone", "start_offset": 51, "end_offset": 55, "type": "<ALPHANUM>", "position": 10 }
  ]
}
```
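The standard analyzer lowercases tokens and splits on word boundaries. That is why Brown-Foxes becomes brown and foxes while dog's stays whole. The Java sketch approximates this with a regular expression. Lucene's StandardTokenizer actually follows the Unicode UAX #29 word-break rules, so treat this as a rough model for simple English text only. Names are hypothetical.

```java
import java.util.*;
import java.util.regex.*;

// Rough approximation of the standard analyzer for simple English text:
// runs of letters/digits, optionally joined by an apostrophe, then lowercased
class StandardAnalyzerSketch {
    private static final Pattern WORD = Pattern.compile("[\\p{L}\\p{N}]+(?:'[\\p{L}\\p{N}]+)*");

    static List<String> analyze(String text) {
        List<String> terms = new ArrayList<>();
        Matcher m = WORD.matcher(text);
        while (m.find()) {
            // Hyphens and trailing punctuation are not part of any match, so they split words
            terms.add(m.group().toLowerCase(Locale.ROOT));
        }
        return terms;
    }
}
```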

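1) Installing the IK analyzer

By default, all languages are analyzed with the Standard Analyzer, which does not segment Chinese text well. A Chinese analyzer therefore needs to be installed.

Note: do not install it automatically with the default `elasticsearch-plugin install xxx.zip`. Instead, download the release that matches your ES version from https://github.com/medcl/elasticsearch-analysis-ik/releases.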

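When Elasticsearch was installed earlier, the container directory /usr/share/elasticsearch/plugins was mapped to /mydata/elasticsearch/plugins on the host. The simplest approach is therefore to download elasticsearch-analysis-ik-7.6.2.zip and unzip it into a subfolder (for example ik) of that directory. Restart the elasticsearch container after installation.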

Alternatively, if you don't mind a few extra steps, you can install it as follows.

a. Check the Elasticsearch version:

```shell
[root@hadoop-104 ~]# curl http://localhost:9200
{
  "name" : "0adeb7852e00",
  "cluster_name" : "elasticsearch",
  "cluster_uuid" : "9gglpP0HTfyOTRAaSe2rIg",
  "version" : {
    "number" : "7.6.2",   # the version is 7.6.2
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "ef48eb35cf30adf4db14086e8aabd07ef6fb113f",
    "build_date" : "2020-03-26T06:34:37.794943Z",
    "build_snapshot" : false,
    "lucene_version" : "8.4.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}
[root@hadoop-104 ~]#
```

b. Enter the ES container, where the plugins directory lives:

`docker exec -it <container id> /bin/bash`

```shell
[root@hadoop-104 ~]# docker exec -it elasticsearch /bin/bash
[root@0adeb7852e00 elasticsearch]#
```

```shell
[root@0adeb7852e00 elasticsearch]# pwd
/usr/share/elasticsearch
# download ik 7.6.2
[root@0adeb7852e00 elasticsearch]# wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.6.2/elasticsearch-analysis-ik-7.6.2.zip
```

```shell
[root@0adeb7852e00 elasticsearch]# unzip elasticsearch-analysis-ik-7.6.2.zip -d ik
Archive:  elasticsearch-analysis-ik-7.6.2.zip
   creating: ik/config/
  inflating: ik/config/main.dic
  inflating: ik/config/quantifier.dic
  inflating: ik/config/extra_single_word_full.dic
  inflating: ik/config/IKAnalyzer.cfg.xml
  inflating: ik/config/surname.dic
  inflating: ik/config/suffix.dic
  inflating: ik/config/stopword.dic
  inflating: ik/config/extra_main.dic
  inflating: ik/config/extra_stopword.dic
  inflating: ik/config/preposition.dic
  inflating: ik/config/extra_single_word_low_freq.dic
  inflating: ik/config/extra_single_word.dic
  inflating: ik/elasticsearch-analysis-ik-7.6.2.jar
  inflating: ik/httpclient-4.5.2.jar
  inflating: ik/httpcore-4.4.4.jar
  inflating: ik/commons-logging-1.2.jar
  inflating: ik/commons-codec-1.9.jar
  inflating: ik/plugin-descriptor.properties
  inflating: ik/plugin-security.policy
[root@0adeb7852e00 elasticsearch]# chmod -R 777 ik/
# move it into the plugins directory
[root@0adeb7852e00 elasticsearch]# mv ik plugins/
```

```shell
[root@0adeb7852e00 elasticsearch]# rm -rf elasticsearch-analysis-ik-7.6.2.zip
```

To confirm that the analyzer is installed, run the following from the bin directory:

```shell
elasticsearch-plugin list
```

2) Testing the analyzers

Using the default analyzer:

```
GET my_index/_analyze
{
  "text": "我是中国人"
}
```

Look at the result: the default analyzer splits the Chinese text into single characters.

```json
{
  "tokens": [
    { "token": "我", "start_offset": 0, "end_offset": 1, "type": "<IDEOGRAPHIC>", "position": 0 },
    { "token": "是", "start_offset": 1, "end_offset": 2, "type": "<IDEOGRAPHIC>", "position": 1 },
    { "token": "中", "start_offset": 2, "end_offset": 3, "type": "<IDEOGRAPHIC>", "position": 2 },
    { "token": "国", "start_offset": 3, "end_offset": 4, "type": "<IDEOGRAPHIC>", "position": 3 },
    { "token": "人", "start_offset": 4, "end_offset": 5, "type": "<IDEOGRAPHIC>", "position": 4 }
  ]
}
```

Using the ik_smart analyzer:

```
GET my_index/_analyze
{
  "analyzer": "ik_smart",
  "text": "我是中国人"
}
```

Output:

```json
{
  "tokens": [
    { "token": "我", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
    { "token": "是", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1 },
    { "token": "中国人", "start_offset": 2, "end_offset": 5, "type": "CN_WORD", "position": 2 }
  ]
}
```

Using the ik_max_word analyzer:

```
GET my_index/_analyze
{
  "analyzer": "ik_max_word",
  "text": "我是中国人"
}
```

Output:

```json
{
  "tokens": [
    { "token": "我", "start_offset": 0, "end_offset": 1, "type": "CN_CHAR", "position": 0 },
    { "token": "是", "start_offset": 1, "end_offset": 2, "type": "CN_CHAR", "position": 1 },
    { "token": "中国人", "start_offset": 2, "end_offset": 5, "type": "CN_WORD", "position": 2 },
    { "token": "中国", "start_offset": 2, "end_offset": 4, "type": "CN_WORD", "position": 3 },
    { "token": "国人", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 4 }
  ]
}
```
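You can illustrate the difference between the two modes with a tiny dictionary-based segmenter in Java. This is a simplified model, not IK's actual algorithm. smart uses forward maximum matching, taking the longest dictionary word at each step. maxWord emits every dictionary word that starts at each position, plus any single character that no word covers. The three-word dictionary and all names are hypothetical. With that dictionary, the sketch reproduces the ik_smart and ik_max_word outputs shown above for 我是中国人.

```java
import java.util.*;

// Simplified dictionary segmenter contrasting a "smart" and a "max word" mode
class DictSegmenterSketch {
    private final Set<String> dict;
    private final int maxLen;

    DictSegmenterSketch(Set<String> dict) {
        this.dict = dict;
        this.maxLen = dict.stream().mapToInt(String::length).max().orElse(1);
    }

    // Forward maximum matching: longest dictionary word first, else one character
    List<String> smart(String text) {
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int len = Math.min(maxLen, text.length() - i);
            while (len > 1 && !dict.contains(text.substring(i, i + len))) len--;
            out.add(text.substring(i, i + len));
            i += len;
        }
        return out;
    }

    // Every dictionary word at every start position, plus uncovered single characters
    List<String> maxWord(String text) {
        List<String> out = new ArrayList<>();
        int coveredUntil = 0; // end of the furthest-reaching word emitted so far
        for (int i = 0; i < text.length(); i++) {
            boolean found = false;
            for (int len = Math.min(maxLen, text.length() - i); len > 1; len--) {
                String w = text.substring(i, i + len);
                if (dict.contains(w)) {
                    out.add(w);
                    coveredUntil = Math.max(coveredUntil, i + len);
                    found = true;
                }
            }
            if (!found && i >= coveredUntil) {
                out.add(text.substring(i, i + 1));
                coveredUntil = i + 1;
            }
        }
        return out;
    }
}
```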

3) Reconfiguring ES

The memory previously allocated to the Linux VM was too small, so first increase the VM's memory.

Then remove the ES Docker container and create a new one:

```shell
[root@localhost ~]# docker ps
1e3900cda632   elasticsearch:7.6.2   "/usr/local/bin/dock…" ...
[root@localhost ~]# docker stop 1e3
[root@localhost ~]# docker rm 1e3
[root@localhost ~]# docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /mydata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /mydata/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /mydata/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -d elasticsearch:7.6.2
```

4) Custom dictionary

First install Nginx as described in Section 5 (Appendix: Installing Nginx).

Edit IKAnalyzer.cfg.xml in /mydata/elasticsearch/plugins/ik/config:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer 扩展配置</comment>
    <!-- local extension dictionary -->
    <entry key="ext_dict"></entry>
    <!-- local extension stop-word dictionary -->
    <entry key="ext_stopwords"></entry>
    <!-- remote extension dictionary served by Nginx -->
    <entry key="remote_ext_dict">http://#/es/fenci.txt</entry>
</properties>
```

The original XML:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer 扩展配置</comment>
    <entry key="ext_dict"></entry>
    <entry key="ext_stopwords"></entry>
</properties>
```

After editing, restart the elasticsearch container; otherwise the change does not take effect.

```shell
[root@localhost config]# docker restart elasticsearch
```

After the update, ES applies the new dictionary only to newly indexed data. Existing data is not re-analyzed. To re-analyze existing data, run:

```
POST my_index/_update_by_query?conflicts=proceed
```

http://#/es/fenci.txt is the URL of the dictionary file served by Nginx.

Before running the example below, install Nginx (see Installing Nginx), then create the file fenci.txt with the following content:

```shell
echo "乔碧萝" > /mydata/nginx/html/fenci.txt
```
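The IK plugin's README says a remote_ext_dict URL should return one word per line. It also says the plugin polls the URL periodically (roughly once a minute) and reloads the words when the Last-Modified or ETag response header changes. Nginx normally sends both headers for static files, which is why serving fenci.txt from it works. This Java sketch models only that contract: parsing the file and detecting changes, with no HTTP. Its class and method names are hypothetical.

```java
import java.util.*;

// Hypothetical model of IK's remote dictionary contract (no networking)
class RemoteDictSketch {
    private String lastModified;
    private String etag;

    // One word per line; blank lines are skipped and \r\n line endings tolerated
    static Set<String> parseWords(String body) {
        Set<String> words = new LinkedHashSet<>();
        for (String line : body.split("\n")) {
            String w = line.trim();
            if (!w.isEmpty()) words.add(w);
        }
        return words;
    }

    // True when either header differs from the values seen on the previous poll
    boolean shouldReload(String newLastModified, String newEtag) {
        boolean changed = !Objects.equals(lastModified, newLastModified)
                || !Objects.equals(etag, newEtag);
        lastModified = newLastModified;
        etag = newEtag;
        return changed;
    }
}
```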

Test:

```
GET my_index/_analyze
{
  "analyzer": "ik_max_word",
  "text": "乔碧萝殿下"
}
```

Output:

```json
{
  "tokens": [
    { "token": "乔碧萝", "start_offset": 0, "end_offset": 3, "type": "CN_WORD", "position": 0 },
    { "token": "殿下", "start_offset": 3, "end_offset": 5, "type": "CN_WORD", "position": 1 }
  ]
}
```

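4. elasticsearch-Rest-Client

1) 9300: TCP

spring-data-elasticsearch: transport-api.jar;

The transport-api.jar version is tied to the Spring Boot version, so it cannot be matched to an arbitrary ES version.

The transport client is deprecated in 7.x and removed in 8.x.

2) 9200: HTTP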

5. Appendix: Installing Nginx

Start a throwaway nginx instance, only to copy its configuration out:

```shell
[root@localhost mydata]# docker run -p 80:80 --name nginx -d nginx:1.10
```

Copy the configuration files from the container to /mydata/nginx/conf/:

```shell
[root@localhost mydata]# docker container cp nginx:/etc/nginx .
# the copy creates a folder named nginx; rename it to conf
[root@localhost mydata]# mv nginx conf
# create a new nginx folder
[root@localhost mydata]# mkdir nginx
[root@localhost mydata]# mv conf nginx/
```

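Stop the original container:

```shell
docker stop nginx
```

Remove the original container:

```shell
docker rm nginx
```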

Create the new Nginx container with the following command:

```shell
docker run -p 80:80 --name nginx \
  -v /mydata/nginx/html:/usr/share/nginx/html \
  -v /mydata/nginx/logs:/var/log/nginx \
  -v /mydata/nginx/conf/:/etc/nginx \
  -d nginx:1.10
```

Make nginx start automatically:

```shell
docker update nginx --restart=always
```

Create /mydata/nginx/html/index.html to test whether Nginx can be reached:

```shell
echo '<h2>hello nginx!</h2>' > /mydata/nginx/html/index.html
```

Visit: `http://<IP of the Nginx host>:80/index.html`

Integrating Elasticsearch with Spring Boot

1. Import the dependency. Its version must match the installed Elasticsearch version.

```xml
<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
    <version>7.6.2</version>
</dependency>
```

The Elasticsearch version managed by spring-boot-dependencies is 6.8.7:

```xml
<elasticsearch.version>6.8.7</elasticsearch.version>
```

Override it to 7.6.2 in the project's pom.xml:

```xml
<properties>
    ...
    <elasticsearch.version>7.6.2</elasticsearch.version>
</properties>
```

2. Writing test classes

1) Testing data indexing: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high-document-index.html

```java
@Test
public void indexData() throws IOException {
    // Target index "users"; no id is set, so Elasticsearch generates one
    IndexRequest indexRequest = new IndexRequest("users");
    User user = new User();
    user.setUserName("张三");
    user.setAge(20);
    user.setGender("男");
    // Serialize the object to JSON and use it as the document source
    String jsonString = JSON.toJSONString(user);
    indexRequest.source(jsonString, XContentType.JSON);
    // Execute the index request with the shared request options
    IndexResponse index = client.index(indexRequest, GulimallElasticSearchConfig.COMMON_OPTIONS);
    System.out.println(index);
}
```

After the test runs:

2) Testing data retrieval: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high-search.html

```java
@Test
public void searchData() throws IOException {
    // Fetch a single document by index name and document id
    GetRequest getRequest = new GetRequest("users", "_-2vAHIB0nzmLJLkxKWk");
    GetResponse getResponse = client.get(getRequest, RequestOptions.DEFAULT);
    System.out.println(getResponse);
    String index = getResponse.getIndex();
    System.out.println(index);
    String id = getResponse.getId();
    System.out.println(id);
    if (getResponse.isExists()) {
        long version = getResponse.getVersion();
        System.out.println(version);
        // The source is available as a String, a Map, or raw bytes
        String sourceAsString = getResponse.getSourceAsString();
        System.out.println(sourceAsString);
        Map<String, Object> sourceAsMap = getResponse.getSourceAsMap();
        System.out.println(sourceAsMap);
        byte[] sourceAsBytes = getResponse.getSourceAsBytes();
    } else {
        // The document was not found
    }
}
```

Querying documents where state = "AK":

```json
{
  "took": 1,
  "timed_out": false,
  "_shards": { "total": 1, "successful": 1, "skipped": 0, "failed": 0 },
  "hits": {
    "total": { "value": 22, "relation": "eq" },
    "max_score": 3.7952394,
    "hits": [
      {
        "_index": "bank",
        "_type": "account",
        "_id": "210",
        "_score": 3.7952394,
        "_source": {
          "account_number": 210,
          "balance": 33946,
          "firstname": "Cherry",
          "lastname": "Carey",
          "age": 24,
          "gender": "M",
          "address": "539 Tiffany Place",
          "employer": "Martgo",
          "email": "cherrycarey@martgo.com",
          "city": "Fairacres",
          "state": "AK"
        }
      },
      ....
    ]
  }
}
```

Search for everyone whose address contains mill, and return their age distribution, average age, and average balance:

```
GET bank/_search
{
  "query": {
    "match": { "address": "Mill" }
  },
  "aggs": {
    "ageAgg": {
      "terms": { "field": "age", "size": 10 }
    },
    "ageAvg": {
      "avg": { "field": "age" }
    },
    "balanceAvg": {
      "avg": { "field": "balance" }
    }
  }
}
```

Java implementation:

```java
@Test
public void searchData() throws IOException {
    // 1. Create the search request and target the "bank" index
    SearchRequest searchRequest = new SearchRequest();
    searchRequest.indices("bank");

    // 2. Build the query: match address against "Mill"
    SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
    sourceBuilder.query(QueryBuilders.matchQuery("address", "Mill"));

    // 3. Aggregations: age distribution, average age, average balance
    TermsAggregationBuilder ageAgg = AggregationBuilders.terms("ageAgg").field("age").size(10);
    sourceBuilder.aggregation(ageAgg);
    AvgAggregationBuilder ageAvg = AggregationBuilders.avg("ageAvg").field("age");
    sourceBuilder.aggregation(ageAvg);
    AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance");
    sourceBuilder.aggregation(balanceAvg);
    System.out.println("Query: " + sourceBuilder);

    // 4. Execute the search
    searchRequest.source(sourceBuilder);
    SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
    System.out.println("Result: " + searchResponse);

    // 5. Map each hit's _source to an Account object
    SearchHits hits = searchResponse.getHits();
    SearchHit[] searchHits = hits.getHits();
    for (SearchHit searchHit : searchHits) {
        String sourceAsString = searchHit.getSourceAsString();
        Account account = JSON.parseObject(sourceAsString, Account.class);
        System.out.println(account);
    }

    // 6. Read the aggregation results by name
    Aggregations aggregations = searchResponse.getAggregations();
    Terms ageAgg1 = aggregations.get("ageAgg");
    for (Terms.Bucket bucket : ageAgg1.getBuckets()) {
        String keyAsString = bucket.getKeyAsString();
        System.out.println("Age: " + keyAsString + " ==> " + bucket.getDocCount());
    }
    Avg ageAvg1 = aggregations.get("ageAvg");
    System.out.println("Average age: " + ageAvg1.getValue());
    Avg balanceAvg1 = aggregations.get("balanceAvg");
    System.out.println("Average balance: " + balanceAvg1.getValue());
}
```

Compare the printed query and results with the Elasticsearch DSL query and results shown earlier.

Miscellaneous

1. Kibana console shortcuts: Ctrl+Home jumps to the top of the document;

Ctrl+End jumps to the end of the document.

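Product listing

Analysis of the SPU storage model in ES. If every SKU document stores the specification attributes, the data is stored redundantly, because all SKUs of the same SPU share the same specification attributes.

However, if the specification attributes are stored in a separate index, a search has to ship large amounts of data between the two lookups (for example the ids of every matching SPU), which can congest the network.

We therefore choose the first model and trade space for time.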

Adding the product mapping to ES. Fields with "index": false and "doc_values": false are only kept in _source: they cannot be searched, sorted, or aggregated on.

```
PUT product
{
  "mappings": {
    "properties": {
      "skuId": { "type": "long" },
      "spuId": { "type": "keyword" },
      "skuTitle": { "type": "text", "analyzer": "ik_smart" },
      "skuPrice": { "type": "keyword" },
      "skuImg": { "type": "keyword", "index": false, "doc_values": false },
      "saleCount": { "type": "long" },
      "hasStock": { "type": "boolean" },
      "hotScore": { "type": "long" },
      "brandId": { "type": "long" },
      "catalogId": { "type": "long" },
      "brandName": { "type": "keyword", "index": false, "doc_values": false },
      "brandImg": { "type": "keyword", "index": false, "doc_values": false },
      "catalogName": { "type": "keyword", "index": false, "doc_values": false },
      "attrs": {
        "type": "nested",
        "properties": {
          "attrId": { "type": "long" },
          "attrName": { "type": "keyword", "index": false, "doc_values": false },
          "attrValue": { "type": "keyword" }
        }
      }
    }
  }
}
```
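attrs is mapped as nested rather than with the default object type. With object, an array of objects is flattened into one array per sub-field. A query for attrId 1 with attrValue "256GB" could then match a product where those two values belong to different attributes. The Java sketch contrasts the two matching rules. The Attr record, method names and sample values are hypothetical.

```java
import java.util.*;

// Hypothetical sketch: object (flattened) vs nested matching of attrs
class NestedVsObjectSketch {
    record Attr(long attrId, String attrValue) {}

    // object mapping: attrs.attrId = [...] and attrs.attrValue = [...] are matched independently
    static boolean matchesFlattened(List<Attr> attrs, long id, String value) {
        boolean idHit = attrs.stream().anyMatch(a -> a.attrId() == id);
        boolean valueHit = attrs.stream().anyMatch(a -> a.attrValue().equals(value));
        return idHit && valueHit;
    }

    // nested mapping: both conditions must hold within the same object
    static boolean matchesNested(List<Attr> attrs, long id, String value) {
        return attrs.stream().anyMatch(a -> a.attrId() == id && a.attrValue().equals(value));
    }
}
```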

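Implementing the product listing API

Add a method for listing a product (putting it on sale) to SpuInfoController:

```java
@PostMapping("{spuId}/up")
public R spuUp(@PathVariable("spuId") Long spuId) {
    // Delegates to the service method implemented below
    spuInfoService.upSpuForSearch(spuId);
    return R.ok();
}
```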

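Listing a product saves the SPU's information to ES and updates the SPU's status. Because SpuInfoEntity does not match the index's data model, we create a dedicated VO for transferring the data: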

```java
import lombok.Data;

import java.math.BigDecimal;
import java.util.List;

@Data
public class SkuEsModel {
    private Long skuId;
    private Long spuId;
    private String skuTitle;
    private BigDecimal skuPrice;
    private String skuImg;
    private Long saleCount;
    private boolean hasStock;
    private Long hotScore;
    private Long brandId;
    private Long catalogId;
    private String brandName;
    private String brandImg;
    private String catalogName;
    private List<Attrs> attrs;

    // Searchable specification attributes (mapped as "nested" in ES)
    @Data
    public static class Attrs {
        private Long attrId;
        private String attrName;
        private String attrValue;
    }
}
```

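Write the product listing service method

Since all SKUs of an SPU share the same specification attributes, we query them once, before building the per-SKU documents.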

```java
public void upSpuForSearch(Long spuId) {
    // 1. All SKUs of this SPU
    List<SkuInfoEntity> skuInfoEntities = skuInfoService.getSkusBySpuId(spuId);

    // 2. Specification attributes are shared by every SKU of the SPU, so query them once
    List<ProductAttrValueEntity> productAttrValueEntities =
            productAttrValueService.list(new QueryWrapper<ProductAttrValueEntity>().eq("spu_id", spuId));
    List<Long> attrIds = productAttrValueEntities.stream()
            .map(ProductAttrValueEntity::getAttrId)
            .collect(Collectors.toList());
    // Keep only the attributes marked as searchable
    List<Long> searchIds = attrService.selectSearchAttrIds(attrIds);
    Set<Long> ids = new HashSet<>(searchIds);
    List<SkuEsModel.Attrs> searchAttrs = productAttrValueEntities.stream()
            .filter(entity -> ids.contains(entity.getAttrId()))
            .map(entity -> {
                SkuEsModel.Attrs attr = new SkuEsModel.Attrs();
                BeanUtils.copyProperties(entity, attr);
                return attr;
            })
            .collect(Collectors.toList());

    // 3. Ask the inventory service (via Feign) which SKUs are in stock
    Map<Long, Boolean> stockMap = null;
    try {
        List<Long> skuIds = skuInfoEntities.stream().map(SkuInfoEntity::getSkuId).collect(Collectors.toList());
        List<SkuHasStockVo> skuHasStocks = wareFeignService.getSkuHasStocks(skuIds);
        stockMap = skuHasStocks.stream()
                .collect(Collectors.toMap(SkuHasStockVo::getSkuId, SkuHasStockVo::getHasStock));
    } catch (Exception e) {
        log.error("Remote call to the inventory service failed", e);
    }

    // 4. Build one ES document per SKU
    Map<Long, Boolean> finalStockMap = stockMap;
    List<SkuEsModel> skuEsModels = skuInfoEntities.stream().map(sku -> {
        SkuEsModel skuEsModel = new SkuEsModel();
        BeanUtils.copyProperties(sku, skuEsModel);
        // Fields whose names differ between SkuInfoEntity and SkuEsModel
        skuEsModel.setSkuPrice(sku.getPrice());
        skuEsModel.setSkuImg(sku.getSkuDefaultImg());
        skuEsModel.setHotScore(0L);
        BrandEntity brandEntity = brandService.getById(sku.getBrandId());
        skuEsModel.setBrandName(brandEntity.getName());
        skuEsModel.setBrandImg(brandEntity.getLogo());
        CategoryEntity categoryEntity = categoryService.getById(sku.getCatalogId());
        skuEsModel.setCatalogName(categoryEntity.getName());
        skuEsModel.setAttrs(searchAttrs);
        // Failed remote call or missing entry: treat as out of stock (avoids unboxing a null Boolean)
        skuEsModel.setHasStock(finalStockMap != null && Boolean.TRUE.equals(finalStockMap.get(sku.getSkuId())));
        return skuEsModel;
    }).collect(Collectors.toList());

    // 5. Save to ES through the search service; on success mark the SPU as listed
    R r = searchFeignService.saveProductAsIndices(skuEsModels);
    if (r.getCode() == 0) {
        this.baseMapper.upSpuStatus(spuId, ProductConstant.ProductStatusEnum.SPU_UP.getCode());
    } else {
        log.error("Failed to save products to ES through the search service");
    }
}
```
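The `hasStock` lookup is the fragile step here: if the remote inventory call fails, or returns no entry (or a null flag) for a SKU, a missing `Boolean` must not be unboxed into the primitive `hasStock`. A minimal plain-Java sketch of that defensive lookup, where `StockRow` is a hypothetical stand-in for `SkuHasStockVo` and the `Supplier` stands in for the Feign call:

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;
import java.util.stream.Collectors;

/** Hypothetical stand-in for SkuHasStockVo. */
final class StockRow {
    final Long skuId;
    final Boolean hasStock;

    StockRow(Long skuId, Boolean hasStock) {
        this.skuId = skuId;
        this.hasStock = hasStock;
    }
}

final class StockLookup {
    /** Builds skuId -> hasStock; falls back to an empty map if the remote call throws. */
    static Map<Long, Boolean> loadStock(Supplier<List<StockRow>> remoteCall) {
        try {
            return remoteCall.get().stream()
                    // Collectors.toMap rejects null values, so a null flag is stored as false
                    .collect(Collectors.toMap(r -> r.skuId, r -> Boolean.TRUE.equals(r.hasStock), (a, b) -> a));
        } catch (RuntimeException e) {
            return Collections.emptyMap();
        }
    }

    /** Never unboxes null: unknown SKUs are reported as out of stock. */
    static boolean hasStock(Map<Long, Boolean> stock, Long skuId) {
        return stock.getOrDefault(skuId, false);
    }
}
```

Returning an empty map instead of `null` on failure lets the per-SKU code call `hasStock` unconditionally.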

Mall home page

Adding dependencies

The front end is built with Thymeleaf, so we add that dependency, plus devtools so that page changes take effect right away:

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-devtools</artifactId>
    <optional>true</optional>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-thymeleaf</artifactId>
</dependency>
```

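Rendering the level-1 category menu

The level-1 categories are needed as soon as the home page is visited, so we load them in the home page handler:

```java
@GetMapping({"/", "index.html"})
public String indexPage(Model model) {
    // Level-1 categories for the menu
    List<CategoryEntity> catagories = categoryService.getLevel1Catagories();
    model.addAttribute("catagories", catagories);
    // Resolved by Thymeleaf to templates/index.html
    return "index";
}
```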

Iterate over the menu data in the page:

```html
<li th:each="catagory:${catagories}">
    <a href="#" class="header_main_left_a" ctg-data="3" th:attr="ctg-data=${catagory.catId}">
        <b th:text="${catagory.name}"></b>
    </a>
</li>
```

Rendering the level-2 and level-3 category menus

First, create a VO class representing the level-2 and level-3 menu entries:

```java
@Data
@AllArgsConstructor
@NoArgsConstructor
public class Catelog2Vo {
    private String catalog1Id;              // id of the parent (level-1) category
    private String id;
    private String name;
    private List<Catelog3Vo> catalog3List;  // level-3 children

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public static class Catelog3Vo {
        private String catalog2Id;          // id of the parent (level-2) category
        private String id;
        private String name;
    }
}
```

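Note the catalog1Id and catalog2Id properties: each holds the id of the entry's parent category (level 1 and level 2, respectively).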

```java
@GetMapping("index/catelog.json")
@ResponseBody
public Map<String, List<Catelog2Vo>> getCategoryJson() {
    return categoryService.getCategoryJson();
}
```


Change the request path in the catalogLoader.js file under resources/static/index to index/catelog.json.

```java
@Override
public Map<String, List<Catelog2Vo>> getCategoryJson() {
    // 1. All level-1 categories
    List<CategoryEntity> level1Categories = getLevel1Catagories();
    // 2. Key: level-1 id; value: its level-2 entries, each carrying its level-3 list
    Map<String, List<Catelog2Vo>> parent_cid = level1Categories.stream().collect(Collectors.toMap(
            k -> k.getCatId().toString(),
            v -> {
                // One database query per level-1 category ...
                List<CategoryEntity> categoryEntities = baseMapper.selectList(
                        new QueryWrapper<CategoryEntity>().eq("parent_cid", v.getCatId()));
                List<Catelog2Vo> catelog2Vos = null;
                if (categoryEntities != null) {
                    catelog2Vos = categoryEntities.stream().map(l2 -> {
                        Catelog2Vo catelog2Vo = new Catelog2Vo(v.getCatId().toString(), l2.getCatId().toString(), l2.getName(), null);
                        // ... and another query per level-2 category
                        List<CategoryEntity> level3Catelog = baseMapper.selectList(
                                new QueryWrapper<CategoryEntity>().eq("parent_cid", l2.getCatId()));
                        if (level3Catelog != null) {
                            List<Catelog2Vo.Catelog3Vo> collect = level3Catelog.stream()
                                    .map(l3 -> new Catelog2Vo.Catelog3Vo(l2.getCatId().toString(), l3.getCatId().toString(), l3.getName()))
                                    .collect(Collectors.toList());
                            catelog2Vo.setCatalog3List(collect);
                        }
                        return catelog2Vo;
                    }).collect(Collectors.toList());
                }
                return catelog2Vos;
            }));
    return parent_cid;
}
```

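Setting up domain-name access

1. Forward proxy and reverse proxy

A forward proxy sits in front of clients and sends requests to servers on their behalf; a reverse proxy sits in front of servers and accepts client requests on their behalf, so clients only ever see the proxy.

Nginx implements load balancing by acting as a reverse proxy in front of several backend servers.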
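When an `upstream` lists several `server` entries, nginx's default balancing method is (weighted) round-robin. The Java sketch below only illustrates the round-robin idea; it ignores weights, health checks, and nginx's other balancing methods, and `RoundRobinBalancer` and the addresses in the example are hypothetical.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative round-robin selector, not nginx's implementation. */
final class RoundRobinBalancer {
    private final List<String> servers;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinBalancer(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("at least one server is required");
        }
        this.servers = List.copyOf(servers);
    }

    /** Returns the next backend in turn; safe to call from multiple threads. */
    String pick() {
        // floorMod keeps the index non-negative even after the counter overflows
        int i = Math.floorMod(next.getAndIncrement(), servers.size());
        return servers.get(i);
    }
}
```

With two backends, successive calls to `pick()` alternate between them, spreading requests evenly.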

2. Nginx configuration files

nginx.conf

```nginx
user  nginx;
worker_processes  1;

error_log  /var/log/nginx/error.log warn;
pid        /var/run/nginx.pid;

# events block
events {
    worker_connections  1024;
}

# http block
http {
    include       /etc/nginx/mime.types;
    default_type  application/octet-stream;

    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';

    access_log  /var/log/nginx/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    keepalive_timeout  65;

    #gzip  on;

    include /etc/nginx/conf.d/*.conf;
}
```

/etc/nginx/conf.d/default.conf

```nginx
# server block in /etc/nginx/conf.d/default.conf
server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;
    #access_log  /var/log/nginx/log/host.access.log  main;

    location / {
        root   /usr/share/nginx/html;
        index  index.html index.htm;
    }

    #error_page  404              /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   /usr/share/nginx/html;
    }

    # proxy the PHP scripts to Apache listening on 127.0.0.1:80
    #
    #location ~ \.php$ {
    #    proxy_pass   http://127.0.0.1;
    #}

    # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
    #
    #location ~ \.php$ {
    #    root           html;
    #    fastcgi_pass   127.0.0.1:9000;
    #    fastcgi_index  index.php;
    #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
    #    include        fastcgi_params;
    #}

    # deny access to .htaccess files, if Apache's document root
    # concurs with nginx's one
    #
    #location ~ /\.ht {
    #    deny  all;
    #}
}
```

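3. Domain-name access with Nginx + Windows

Edit the Windows hosts file to map gulimall.com to the virtual machine's IP.

In Nginx's main configuration file nginx.conf, add an upstream that points to our gateway service: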

```nginx
# Must be placed inside the http block of nginx.conf
upstream gulimall {
    server 192.168.56.1:88;
}
```

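In the server-block config file gulimall.conf, forward every request whose path starts with / to the gulimall upstream. When proxying, Nginx does not pass the original Host header by default (it sends the proxied server's name instead), so we set it explicitly: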

```nginx
location / {
    proxy_pass http://gulimall;
    proxy_set_header Host $host;
}
```

Configure a gateway route that forwards requests for **.gulimall.com hosts to the product service:

```yaml
- id: gulimall_host
  uri: lb://gulimall-product
  predicates:
    - Host=**.gulimall.com
```
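Conceptually, the Host predicate chooses a route from the request's `Host` header, which is why `proxy_set_header Host $host` matters in the nginx config: without it the gateway would receive the upstream name (`gulimall`) rather than `gulimall.com`. The sketch below uses a simple "domain or subdomain" check as a stand-in; it is not Spring Cloud Gateway's actual pattern matcher, and `HostRouter` is hypothetical.

```java
import java.util.Locale;
import java.util.Optional;

/** Hypothetical host-based router; a simplified stand-in for the gateway's Host predicate. */
final class HostRouter {
    /** True for the domain itself or any subdomain of it, ignoring case and an optional port. */
    static boolean matchesDomain(String hostHeader, String domain) {
        if (hostHeader == null) {
            return false;
        }
        String host = hostHeader.split(":", 2)[0].toLowerCase(Locale.ROOT);
        String d = domain.toLowerCase(Locale.ROOT);
        return host.equals(d) || host.endsWith("." + d);
    }

    /** Returns the route's target URI when the Host header belongs to gulimall.com. */
    static Optional<String> route(String hostHeader) {
        return matchesDomain(hostHeader, "gulimall.com")
                ? Optional.of("lb://gulimall-product")
                : Optional.empty();
    }
}
```

`route("gulimall")`, the header the gateway would see without the nginx fix, finds no route.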

Performance testing and optimization

1. Load-testing tools and environment

Note: the "simple service" just returns a string.

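| Test | Threads | Throughput (/s) | 90% response time (ms) | 99% response time (ms) |
|---|---|---|---|---|
| Nginx | 50 | 6355 | 4 | 235 |
| Gateway | 50 | 14355 | 5 | 23 |
| Simple service | 50 | 27373 | 3 | 5 |
| Home page level-1 menu rendering | 50 | 252 (DB, Thymeleaf) | 241 | 316 |
| Home page menu rendering (cache enabled) | 50 | 640 | 100 | 179 |
| Home page menu rendering (cache enabled, DB optimized, logging off) | 50 | 1204 | 50 | 85 |
| Three-level category fetch | 50 | 5 (DB) | 10132 | 10275 |
| Three-level category (with index) | 50 | 15 (index added) | 3715 | 3871 |
| Three-level category (optimized logic) | 50 | 285 | 205 | 313 |
| Three-level category (Redis cache) | 50 | 658 | 97 | 121 |
| Home page full data fetch | 50 | 2.5 (static resources) | 34096 | 35168 |
| Home page full data fetch (static/dynamic separation) | 50 | 7 | 3977 | 5215 |
| Gateway + simple service | 50 | 6200 | 13 | 34 |
| Full chain (Nginx + Gateway + simple service) | 50 | 1539 | 46 | 66 |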

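The more middleware a request passes through, the more performance is lost, mostly to network communication between the hops.

Business-side factors:

DB (MySQL optimization)

Template rendering speed (caching)

Static resources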

2. Home page menu rendering: optimizing the database

Before optimizing the database:

```java
public List<CategoryEntity> getLevel1Catagories() {
    long start = System.currentTimeMillis();
    // Level-1 categories have parent_cid = 0
    List<CategoryEntity> parent_cid = this.list(new QueryWrapper<CategoryEntity>().eq("parent_cid", 0));
    System.out.println("Level-1 menu query time (ms): " + (System.currentTimeMillis() - start));
    return parent_cid;
}
```

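After adding an index on parent_cid:

However, neither the overall request handling nor the throughput improved noticeably, possibly because the database is remote and network round-trips dominate.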

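3. Three-level categories (optimizing the business logic)

Before:

Every level-2 category in the loop triggers another database query, so a single request issues many queries and wastes a lot of resources.

After:

Query the database only once, then build the remaining levels by filtering that in-memory list.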

```java
@Override
public Map<String, List<Catelog2Vo>> getCategoryJson() {
    // Single query: load every category row once
    List<CategoryEntity> selectList = baseMapper.selectList(null);
    // Level-1 categories have parent_cid = 0
    List<CategoryEntity> level1Categories = getParent_cid(selectList, 0L);
    Map<String, List<Catelog2Vo>> parent_cid = level1Categories.stream().collect(Collectors.toMap(
            k -> k.getCatId().toString(),
            v -> {
                List<CategoryEntity> categoryEntities = getParent_cid(selectList, v.getCatId());
                List<Catelog2Vo> catelog2Vos = null;
                if (categoryEntities != null) {
                    catelog2Vos = categoryEntities.stream().map(l2 -> {
                        Catelog2Vo catelog2Vo = new Catelog2Vo(v.getCatId().toString(), l2.getCatId().toString(), l2.getName(), null);
                        List<CategoryEntity> level3Catelog = getParent_cid(selectList, l2.getCatId());
                        if (level3Catelog != null) {
                            List<Catelog2Vo.Catelog3Vo> collect = level3Catelog.stream()
                                    .map(l3 -> new Catelog2Vo.Catelog3Vo(l2.getCatId().toString(), l3.getCatId().toString(), l3.getName()))
                                    .collect(Collectors.toList());
                            catelog2Vo.setCatalog3List(collect);
                        }
                        return catelog2Vo;
                    }).collect(Collectors.toList());
                }
                return catelog2Vos;
            }));
    return parent_cid;
}

// Filters the in-memory list by parent id instead of querying the database
private List<CategoryEntity> getParent_cid(List<CategoryEntity> selectList, Long parent_cid) {
    // Use equals, not ==: == compares Long object identity and can fail for ids outside -128..127
    return selectList.stream()
            .filter(item -> parent_cid.equals(item.getParentCid()))
            .collect(Collectors.toList());
}
```
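`getParent_cid` still scans the whole list once per parent. An alternative (not the course's code) is to group the single query result by parent id once, after which each lookup is a map access. Comparing ids must use `equals`: `Long` values outside the boxing cache (-128 to 127 by default) are distinct objects, so `==` can be false for equal ids. `Cat` and `CategoryIndex` are hypothetical stand-ins for `CategoryEntity` and the service logic.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical stand-in for CategoryEntity. */
final class Cat {
    final Long catId;
    final Long parentCid;
    final String name;

    Cat(Long catId, Long parentCid, String name) {
        this.catId = catId;
        this.parentCid = parentCid;
        this.name = name;
    }
}

final class CategoryIndex {
    private final Map<Long, List<Cat>> byParent;

    /** One pass over the rows from a single selectList(null) call; parentCid must be non-null (level 1 uses 0). */
    CategoryIndex(List<Cat> all) {
        // groupingBy keys a HashMap, which uses equals/hashCode, so large Long ids compare correctly
        this.byParent = all.stream().collect(Collectors.groupingBy(c -> c.parentCid));
    }

    /** Children of the given parent, or an empty list if there are none. */
    List<Cat> children(Long parentCid) {
        return byParent.getOrDefault(parentCid, Collections.emptyList());
    }
}
```

Building the index costs one pass over all rows; each later `children(...)` call avoids rescanning the list.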

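4. Nginx static/dynamic separation

Both dynamic and static resources are currently served by the application server. To reduce its load, we move static resources such as JS, CSS, and images to Nginx.

Create a static folder inside Nginx's html folder and upload all static resources (index/css and so on) into it.

Change the static resource paths in index.html so they all carry the /static prefix, e.g. src="/static/index/img/img_09.png".

Edit the Nginx config file /mydata/nginx/conf/conf.d/gulimall.conf:

Requests whose path starts with /static are served from the html folder; everything else is still proxied to the gateway.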

```nginx
# Static resources are served directly by Nginx
location /static {
    root /usr/share/nginx/html;
}

# Everything else is proxied to the gateway
location / {
    proxy_pass http://gulimall;
    proxy_set_header Host $host;
}
```
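With these two prefix locations, nginx chooses the longest matching prefix, so `/static/...` is served from disk while everything else falls through to `location /`. With `root`, the full URI is appended to the root path, so `/static/index/img/img_09.png` is read from `/usr/share/nginx/html/static/index/img/img_09.png`. A simplified Java model of that decision (it ignores regex and `=` locations; `LocationMatcher` is hypothetical):

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

final class LocationMatcher {
    private static final String ROOT = "/usr/share/nginx/html";

    /** Picks the longest prefix location that the URI starts with (simplified nginx rule). */
    static Optional<String> match(String uri, List<String> prefixes) {
        return prefixes.stream()
                .filter(uri::startsWith)
                .max(Comparator.comparingInt(String::length));
    }

    /** Where a request ends up under the two locations in gulimall.conf. */
    static String resolve(String uri) {
        String location = match(uri, List.of("/static", "/")).orElseThrow();
        if (location.equals("/static")) {
            // root + full URI: the /static prefix stays in the file path
            return "file:" + ROOT + uri;
        }
        return "proxy:http://gulimall" + uri;
    }
}
```

This is also why the static files must live in a `static` subfolder of `html`: the prefix is kept in the resolved path.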

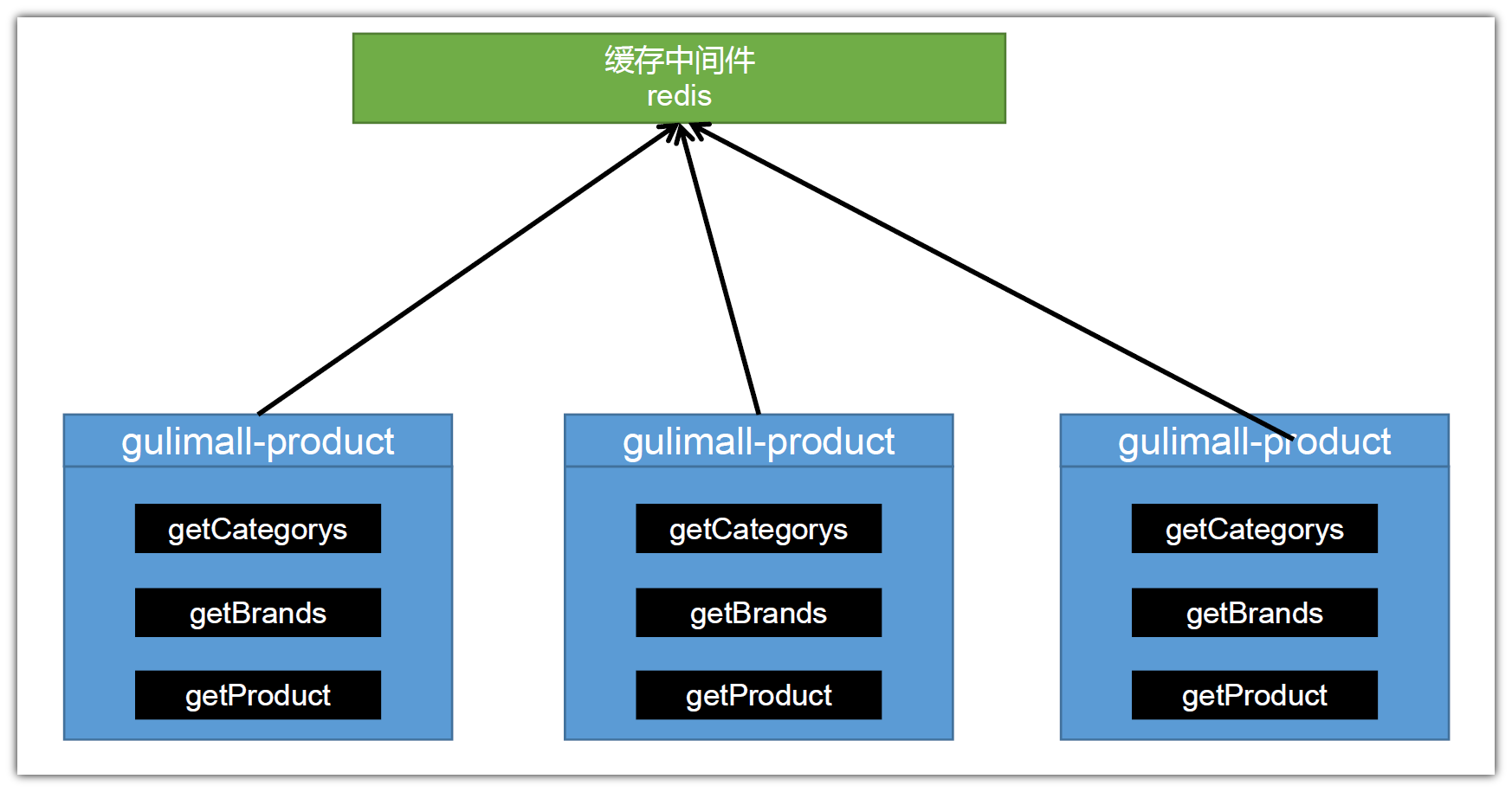

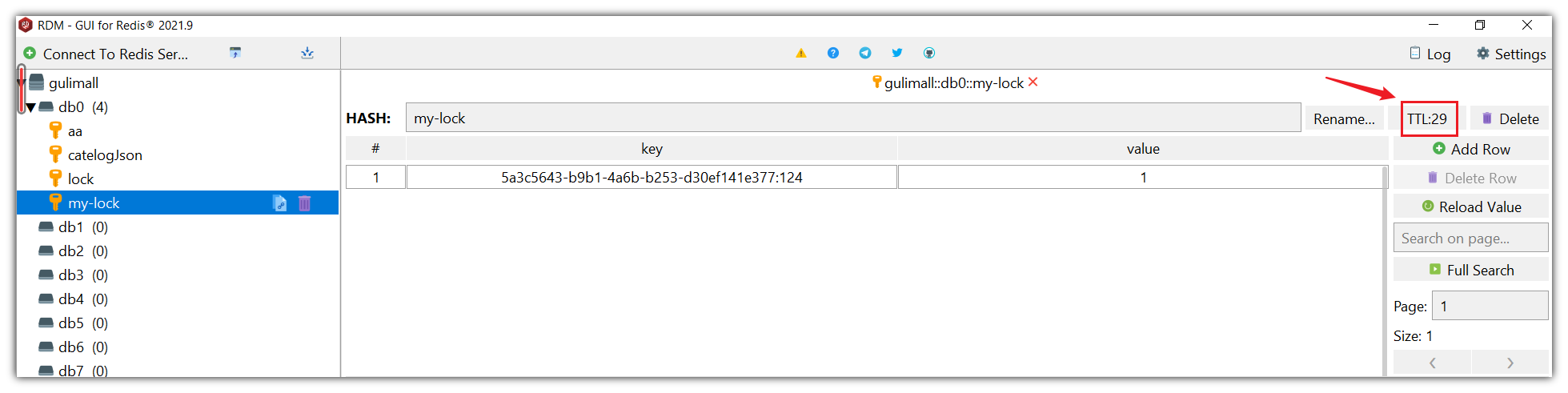

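Caching

1. Local cache

1) Local cache with a HashMap

```java
// Simple local cache; HashMap is not thread-safe, so concurrent first requests may each query the DB
private Map<String, Object> cache = new HashMap<>();

public Map<String, List<Catelog2Vo>> getCategoryMap() {
    @SuppressWarnings("unchecked")
    Map<String, List<Catelog2Vo>> catalogMap = (Map<String, List<Catelog2Vo>>) cache.get("catalogMap");
    if (catalogMap == null) {
        // Cache miss: load from the database and store the result
        catalogMap = getCategoriesDb();
        cache.put("catalogMap", catalogMap);
    }
    return catalogMap;
}
```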
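A plain `HashMap` field is unsafe when several request threads fill it at once, and two threads that both miss will each hit the database. A minimal thread-safe variant uses `ConcurrentHashMap.computeIfAbsent`, which applies the loader at most once per key; the loader here is a placeholder for something like `getCategoriesDb()`, and `LocalCache` is a hypothetical name. Either way, a local cache lives inside one JVM, so multiple instances of the service each hold their own copy and can drift apart, which motivates a shared cache such as Redis.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

/** Minimal per-JVM cache; LocalCache and its loader are illustrative. */
final class LocalCache<V> {
    private final ConcurrentHashMap<String, V> cache = new ConcurrentHashMap<>();

    /** Returns the cached value, invoking the loader only on the first miss for this key. */
    V get(String key, Supplier<V> loader) {
        // If the loader returns null, nothing is cached and it will run again next time
        return cache.computeIfAbsent(key, k -> loader.get());
    }

    /** Drops the entry, e.g. after the categories are edited. */
    void evict(String key) {
        cache.remove(key);
    }
}
```

Eviction must be triggered explicitly when the data changes; this sketch has no expiry.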

2) Integrating Redis for testing

Add the dependency:

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
```

Configure the Redis host address:

```yaml
spring:
  redis:
    host:
    port: 6379
```

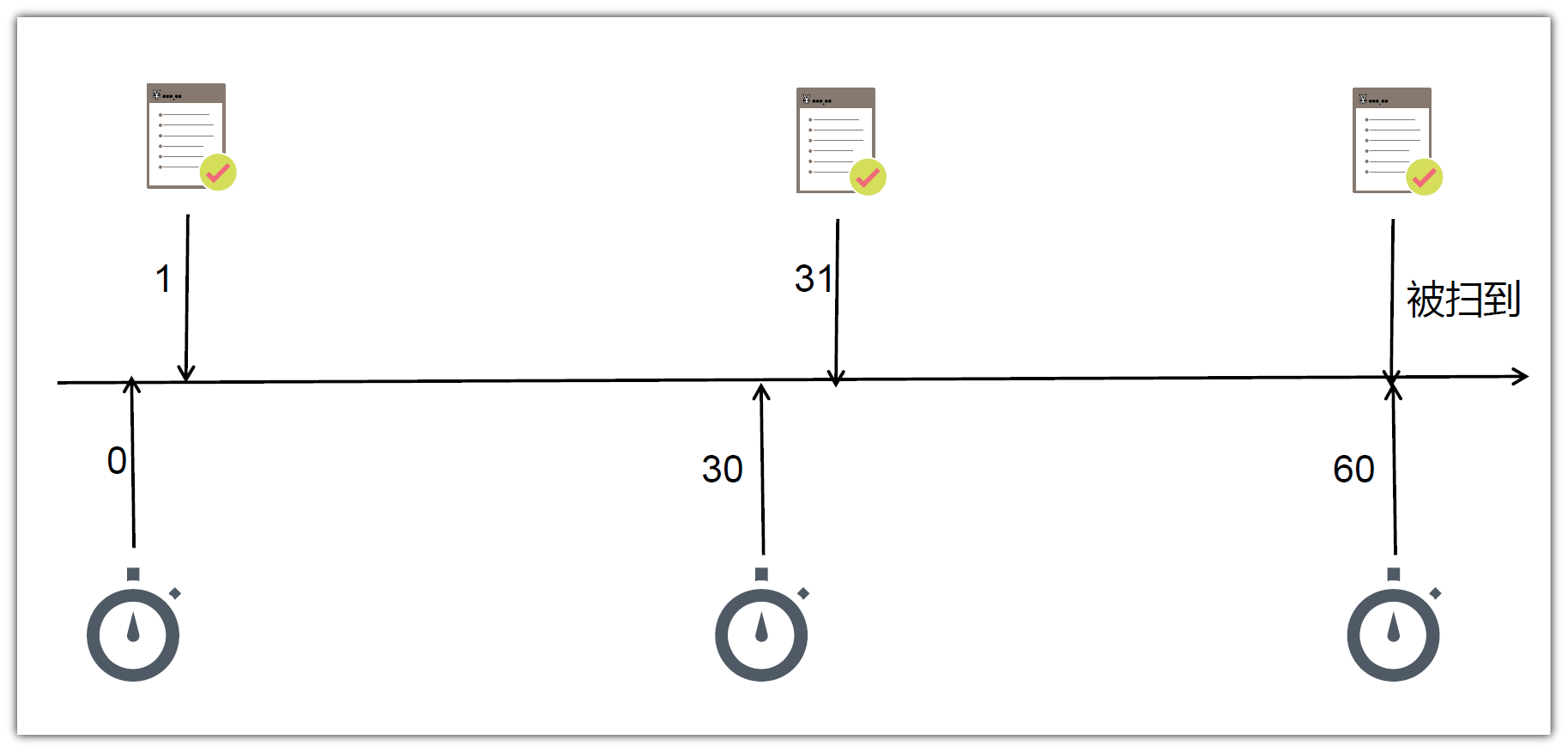

Use the RedisTemplate that Spring Boot auto-configures to optimize the menu-fetching logic.
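The usual shape for this is cache-aside: read the cached string for a key; on a miss, load from the database, write the serialized result back, and return it. In the real service the store would be `redisTemplate.opsForValue()` (its `get(key)` and `set(key, value)` methods) plus a JSON library for (de)serialization; in the runnable sketch below both are replaced by hypothetical stand-ins (`StringStore`, `InMemoryStore`, plain functions), so only the control flow is meant to carry over. The key name `catalogJSON` in the example is also an assumption.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.Supplier;

/** Hypothetical minimal view of a string key-value store such as Redis. */
interface StringStore {
    String get(String key);

    void set(String key, String value);
}

/** In-memory StringStore used only to make the sketch runnable. */
final class InMemoryStore implements StringStore {
    private final Map<String, String> data = new ConcurrentHashMap<>();

    @Override
    public String get(String key) {
        return data.get(key);
    }

    @Override
    public void set(String key, String value) {
        data.put(key, value);
    }
}

final class CacheAside<T> {
    private final StringStore store;
    private final Function<T, String> serialize;
    private final Function<String, T> deserialize;

    CacheAside(StringStore store, Function<T, String> serialize, Function<String, T> deserialize) {
        this.store = store;
        this.serialize = serialize;
        this.deserialize = deserialize;
    }

    /** Reads through the cache; loadFromDb runs only when the key is missing or empty. */
    T get(String key, Supplier<T> loadFromDb) {
        String cached = store.get(key);
        if (cached != null && !cached.isEmpty()) {
            return deserialize.apply(cached);
        }
        T fresh = loadFromDb.get();
        store.set(key, serialize.apply(fresh));
        return fresh;
    }
}
```

This sketch does not guard against many threads missing the same key at the same moment; each of them would query the database.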